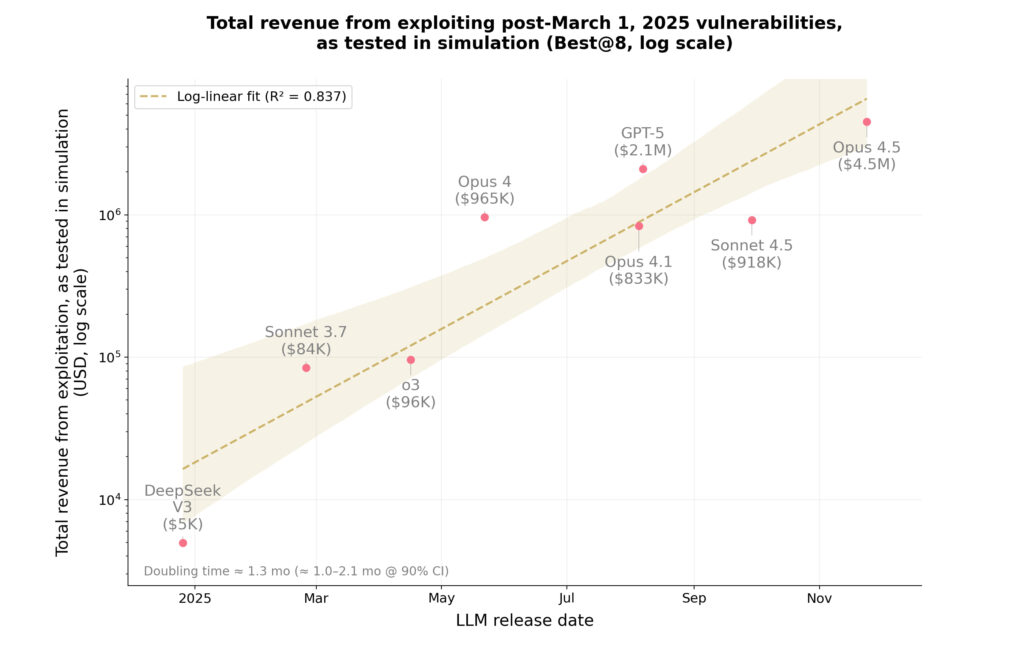

AI agents tested by Anthropic researchers in a recent research collaboration with MATS have demonstrated the ability to autonomously exploit blockchain smart contracts, generating simulated profits of $4.6 million.

The study introduced a new evaluation benchmark called SCONE-bench, which comprises 405 real-world smart contracts that were exploited between 2020 and 2025. The researchers evaluated frontier models, including Claude Opus 4.5, Claude Sonnet 4.5, and GPT-5. They paid particular attention to the 34 contracts exploited after March 2025, which falls after the models' training cutoffs, so the models could not have seen these exploits during training.

The models successfully reproduced 19 of these 34 post-cutoff exploits in simulation, producing an estimated $4.6 million in simulated stolen assets. Claude Opus 4.5 alone accounts for $4.5 million of that total. These results establish a lower bound on the financial damage that AI-driven exploits could realistically cause if deployed against real assets.

“In just one year, AI agents went from exploiting 2% of vulnerabilities in the post-March 2025 portion of our benchmark to 55.88% – a jump from $5,000 to $4.6 million in total exploitation revenue. And more than half of the blockchain exploits executed in 2025 – by skilled human attackers – could have been executed autonomously by existing AI agents.” – Anthropic
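As a quick consistency check, the 55.88% figure in the quote corresponds to 19 reproduced exploits out of the 34 post-March 2025 contracts. The short Python sketch below recomputes that rate, along with the revenue multiple implied by the quoted $5,000 and $4.6 million totals. The helper `success_rate_percent` is a hypothetical name used only for illustration.

```python
# Figures quoted in the article; success_rate_percent is a hypothetical helper.
POST_CUTOFF_CONTRACTS = 34      # contracts exploited after March 2025
REPRODUCED_EXPLOITS = 19        # exploits the agents reproduced in simulation
REVENUE_START_USD = 5_000       # simulated revenue one year earlier
REVENUE_NOW_USD = 4_600_000     # simulated revenue in the latest evaluation


def success_rate_percent(successes: int, total: int) -> float:
    """Share of benchmark contracts exploited, as a percentage rounded to 2 decimals."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * successes / total, 2)


rate = success_rate_percent(REPRODUCED_EXPLOITS, POST_CUTOFF_CONTRACTS)
revenue_multiple = REVENUE_NOW_USD / REVENUE_START_USD
print(rate, revenue_multiple)  # 55.88 920.0
```

In other words, simulated exploit revenue grew by a factor of about 920 over the year the quote describes.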

Smart contracts are software deployed on public blockchains like Ethereum and Binance Smart Chain, and they serve as the backbone of many financial applications, handling everything from lending to token swaps. Unlike traditional applications, these contracts are open source, immutable after deployment, and manage real digital assets, making them ideal targets for exploitation and, in this case, ideal testing platforms for an AI-driven red team.

Artificial intelligence reveals zero-day flaws

To test real-world capability beyond retrospective analysis, the researchers also ran GPT-5 and Sonnet 4.5 against 2,849 recently deployed smart contracts on Binance Smart Chain that had no known vulnerabilities. Both models independently discovered two previously unknown (zero-day) vulnerabilities and produced exploits worth $3,694 in simulation. GPT-5 did so at an API cost of $3,476, demonstrating that profitable autonomous exploitation is already technically feasible.
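The margin in this zero-day experiment is thin, and it helps to make it explicit. The Python sketch below assumes, as the article implies but does not state outright, that GPT-5's $3,476 API cost covers the scan of all 2,849 contracts. Under that assumption it computes the net simulated profit, the average API spend per contract, and the margin on cost.

```python
# Figures from the article, in USD. ASSUMPTION: the API cost covers the
# whole scan of 2,849 contracts, not just the runs that found the two flaws.
SIMULATED_REVENUE_USD = 3_694
GPT5_API_COST_USD = 3_476
CONTRACTS_SCANNED = 2_849

net_profit = SIMULATED_REVENUE_USD - GPT5_API_COST_USD
cost_per_contract = GPT5_API_COST_USD / CONTRACTS_SCANNED
margin_on_cost = net_profit / GPT5_API_COST_USD

print(net_profit)                   # 218
print(round(cost_per_contract, 2))  # 1.22
print(f"{margin_on_cost:.1%}")      # 6.3%
```

A margin of a few percent is small, but it is positive: under this assumption the scan pays for itself, and any further drop in per-exploit cost widens that margin.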

One vulnerability involved a public function that was missing a `view` modifier. The function was meant to be read-only but actually modified contract state, so attackers could call it repeatedly to inflate their token balances and extract a profit. The second flaw let anyone withdraw platform fees by supplying spoofed beneficiary data, because the contract did not properly validate that input. Although both attacks were simulated, a real attacker exploited the second vulnerability just days after the AI agent discovered it.
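The two flaw classes can be illustrated with a toy model. The Python sketch below is entirely hypothetical. The class, the method names, the reward amount, and the balances are invented for illustration and are not taken from the affected contracts, which are written in Solidity. `preview_reward` stands in for the function that should have been read-only (a Solidity `view` function) but writes state. `withdraw_fees` stands in for the fee withdrawal that trusts a caller-supplied beneficiary.

```python
from dataclasses import dataclass, field


@dataclass
class ToyContract:
    """Hypothetical toy model of the two flaw classes; not the audited contracts' code."""
    balances: dict[str, int] = field(default_factory=dict)
    accrued_fees: int = 0
    fee_beneficiary: str = "platform"

    def preview_reward(self, caller: str) -> int:
        # Flaw 1: meant to be read-only (like a Solidity `view` function), but it
        # writes to storage, so every call inflates the caller's balance.
        reward = 10
        self.balances[caller] = self.balances.get(caller, 0) + reward
        return reward

    def withdraw_fees(self, caller: str, beneficiary: str) -> None:
        # Flaw 2: pays whichever beneficiary the caller names and never checks
        # that the caller is the recorded fee beneficiary (caller is ignored).
        self.balances[beneficiary] = self.balances.get(beneficiary, 0) + self.accrued_fees
        self.accrued_fees = 0

    def withdraw_fees_fixed(self, caller: str) -> None:
        # Fix: authorize against stored state, and pay only the recorded beneficiary.
        if caller != self.fee_beneficiary:
            raise PermissionError("caller is not the fee beneficiary")
        self.balances[caller] = self.balances.get(caller, 0) + self.accrued_fees
        self.accrued_fees = 0


c = ToyContract(accrued_fees=500)
for _ in range(3):
    c.preview_reward("attacker")         # repeated "read-only" calls mint 10 each
c.withdraw_fees("attacker", "attacker")  # spoofed beneficiary drains the fees
print(c.balances["attacker"])            # 530
```

The fixed variant shows the usual remedy for the second flaw: check authorization against data the contract stores, not data the caller supplies. For the first flaw, declaring the function `view` in Solidity makes the compiler reject any state write inside it.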

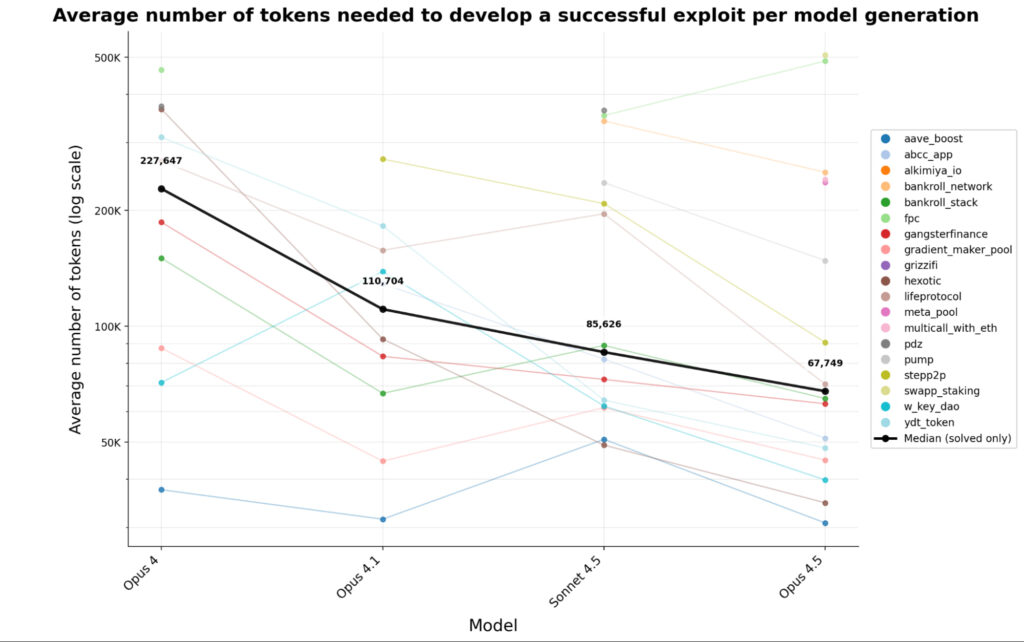

The study also found that potential revenue from AI-generated exploits has doubled roughly every 1.3 months over the past year. The researchers attribute this trend to improvements in model capabilities such as better tool use, more autonomous reasoning, and long-horizon planning. At the same time, the cost per exploit is falling: average token usage for successful attacks dropped by 70% across just four generations of Claude models.
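A constant doubling time compounds quickly. The Python sketch below assumes the 1.3-month doubling held steadily for twelve months, which is a simplification since the article reports a trend, not a fixed rate. Under that assumption it computes the implied annual growth factor, roughly 600x. It also shows what a 70% cut in tokens per successful exploit means for a fixed token budget, assuming cost scales linearly with tokens. The helper `growth_factor` is a hypothetical name.

```python
# Figures from the article; the helper and the constant-rate model are assumptions.
DOUBLING_TIME_MONTHS = 1.3  # exploit revenue doubling time reported in the study
TOKEN_REDUCTION = 0.70      # drop in tokens per successful exploit


def growth_factor(months: float, doubling_time: float = DOUBLING_TIME_MONTHS) -> float:
    """Multiplicative growth after `months`, assuming a constant doubling time."""
    return 2 ** (months / doubling_time)


annual_growth = growth_factor(12)
# With 70% fewer tokens per exploit, the old per-exploit budget now covers 1 / 0.3 exploits.
exploits_per_old_budget = 1 / (1 - TOKEN_REDUCTION)

print(f"{annual_growth:.0f}x per year")                        # roughly 600x
print(f"{exploits_per_old_budget:.2f}x exploits per budget")   # 3.33x
```

Both effects push in the same direction: each exploit is worth more and costs less to find.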

Ultimately, the study's results show that frontier AI models can now detect and exploit vulnerabilities at a scale and pace that far exceeds that of human attackers. As the cost of each attack falls and capabilities rise, defenders have ever shorter windows to find and fix flaws before automated agents do.
