Key Insights

- ROVR is releasing the ROVR Open Dataset, an initial set of ~200,000 frames (synchronized snapshots combining camera imagery and LiDAR), democratizing access to real-world 3D data.

- Existing models underperform when tested on ROVR’s data, demonstrating that current approaches don’t generalize to its challenging scenarios.

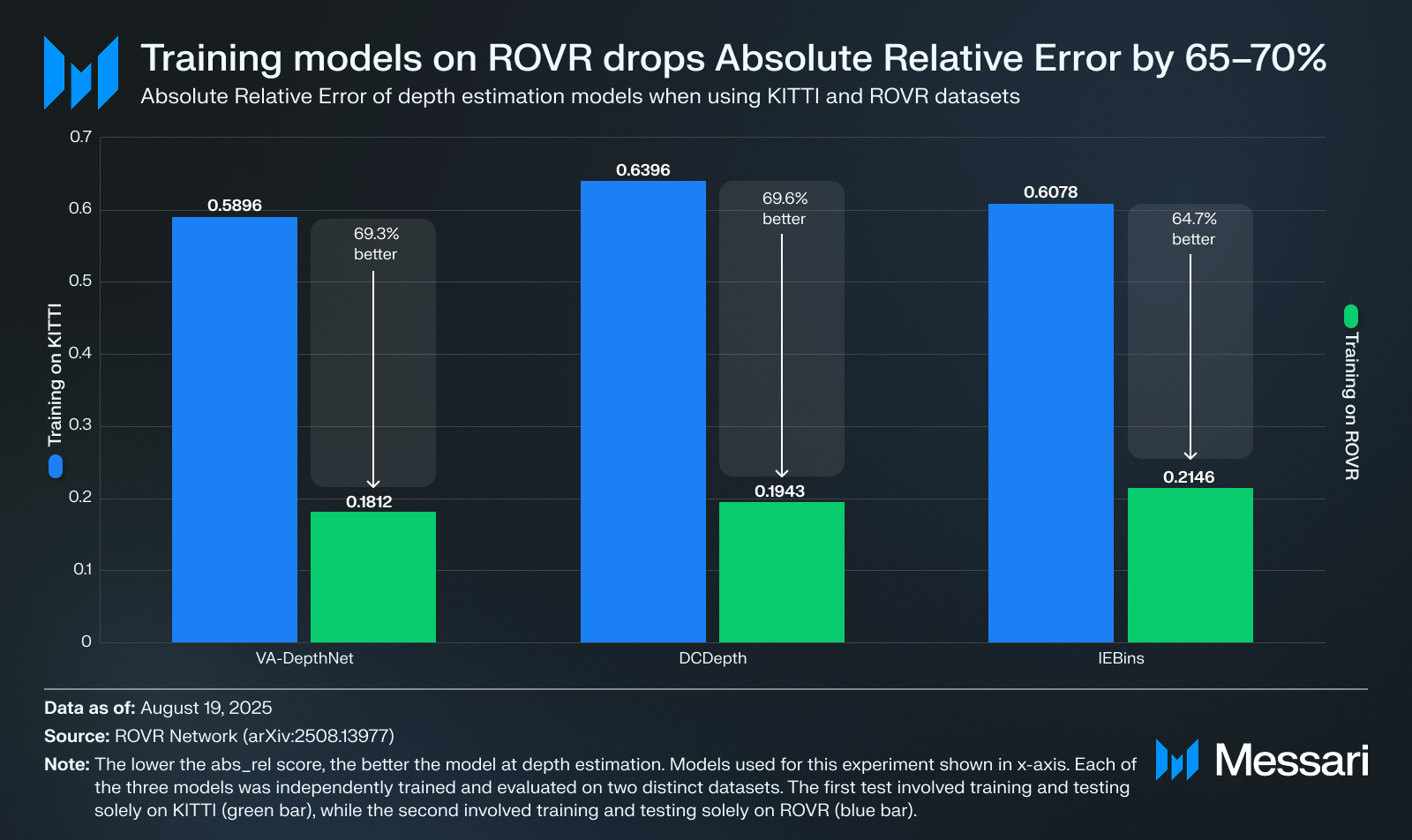

- Training on ROVR reduced absolute relative error scores across models, with VA-DepthNet, DCDepth, and IEBins down 69.3%, 69.6%, and 64.7% versus KITTI training, respectively.

- ROVR is launching a set of open challenges, one on predicting distance from a single camera image and another on finding and labeling objects using a camera plus LiDAR, to accelerate spatial AI development through open collaboration.

Primer

ROVR Network (ROVR) is a decentralized physical infrastructure network (DePIN) dedicated to constructing a comprehensive geospatial data platform through specialized hardware and software solutions. Its mission is to collect and produce large-scale, highly accurate 3D geospatial and 4D spatiotemporal data from real-world environments, addressing the critical bottleneck in quality 3D data availability. This data is vital for training and deploying advanced systems like autonomous vehicles, robotics, and spatial artificial intelligence solutions. By democratizing access to critical resources traditionally monopolized by large corporations, ROVR empowers individual contributors to participate directly in the economic benefits of the AI-driven economy.

Data collection within the ROVR ecosystem relies on two specialized devices: TarantulaX (TX) and LightCone (LC). TarantulaX is a compact hardware device that mounts on the vehicle roof and links to a driver’s smartphone over Bluetooth. By applying centimeter-level corrections from GEODNET, it turns everyday mobile video into accurate geospatial data. LightCone, by contrast, is a roof-mounted sensor that pairs an automotive LiDAR, ADAS-grade camera, tri-band RTK satellite antenna, and high-precision IMU to capture centimeter-accurate 3D data. Users who contribute quality data are rewarded with ROVR tokens, with rewards based on factors including the amount of data collected (measured in mapping mileage), data quality, and frequency of road revisits.

The data gathered is subsequently transformed into high-definition (HD) maps that deliver centimeter-level precision and detailed environmental context, critical for applications such as autonomous vehicle navigation. ROVR’s 3D data generation tools support the training of advanced AI models, enabling precise scene editing and the creation of synthetic data based on actual real-world conditions.


The Geospatial Bottleneck

Training spatial AI solutions, like self-driving cars and humanoid robots, requires accurate and scalable geospatial data that covers edge cases. Depth is the distance from the camera to each point in the image. It’s a go-to measure because LiDAR captures those distances accurately, and together they describe the 3D shape needed for navigation. Depth estimation involves predicting distances to roads, cars, people, and buildings across many places, times of day, and weather conditions. Depth is the basic signal these systems use to decide where they can and cannot go.

Today, most high-quality geospatial depth data is held by a few companies. Public datasets exist, but they are small, narrow, or expensive to reproduce. This creates a data bottleneck: models learn a handful of routes very well, then falter when the scene shifts.

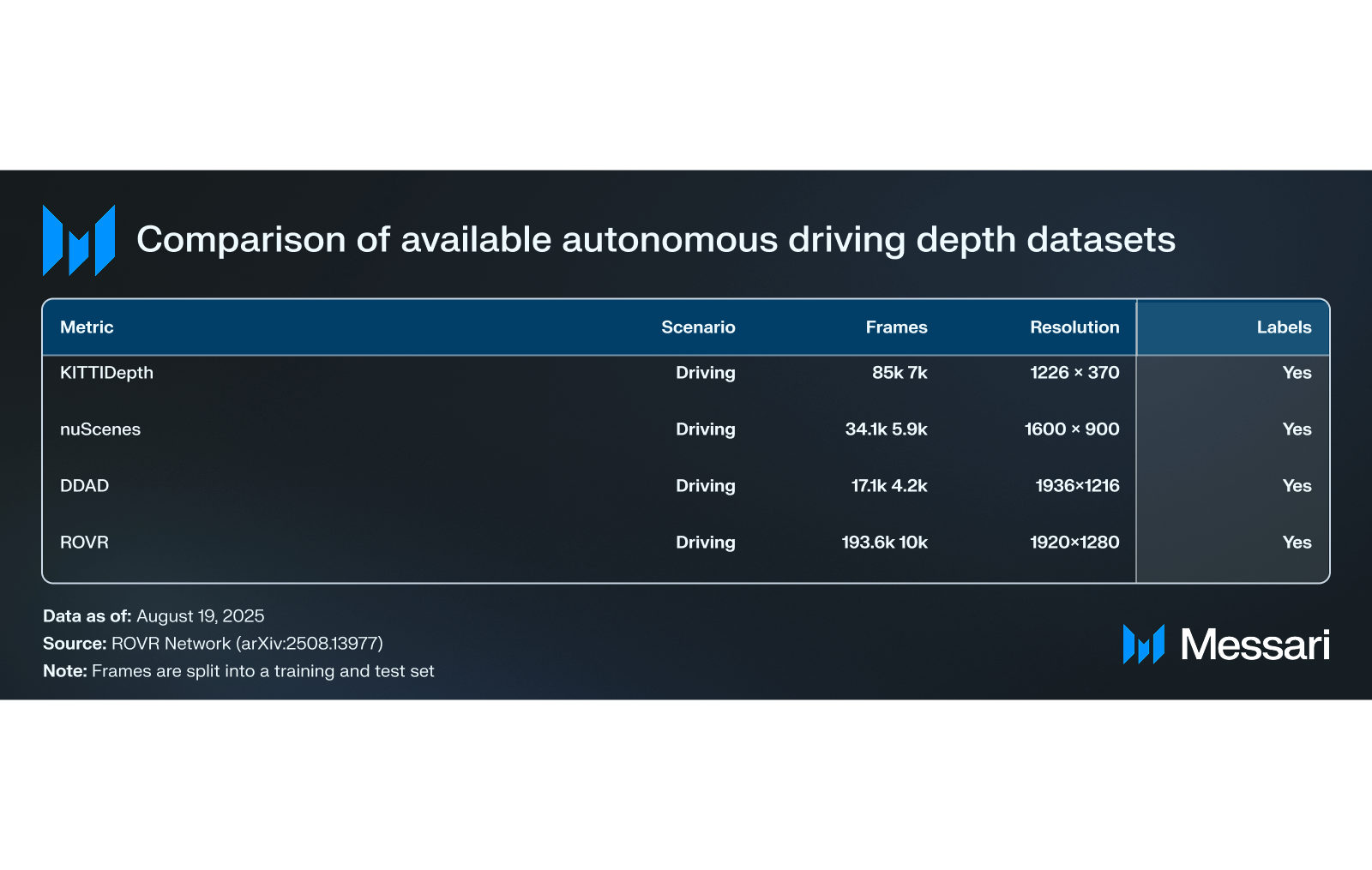

Depth benchmarks come from recorded drives with synchronized camera and LiDAR rigs. They differ in scene coverage, label fidelity, and rig complexity, and those tradeoffs result in varying limitations. KITTI is compact and filmed in a few calm towns, so models tend to memorize those settings. nuScenes spans more locations and weather, but its reference distances can be noisy when sensors are not perfectly aligned. DDAD reaches farther with denser references, but the complex hardware makes large-scale collection costly.

Enter the ROVR Open Dataset

ROVR’s Open Dataset is built to move past those limits by focusing on diverse geographies and conditions. The initial release includes ~200,000 frames (synchronized snapshots combining a camera image, LiDAR-based depth, and the car’s location and direction), with a path to expand to 1 million frames using the same acquisition and processing pipeline. Each sequence, a continuous stretch of a drive recorded as a short clip, comes with clean calibration and per-frame references. This first release focuses on monocular depth, while laying a direct path to object detection and scene labeling. Announced at the ADAS & Autonomous Vehicle Technology Summit, the Open Dataset draws on ROVR’s broader network, spanning 50+ countries and more than 20 million kilometers of real-world driving, to seed a resource for autonomous driving and spatial-AI research.

The dataset is free and open for non-commercial use with attribution. A commercial license is available for companies to use it in products and services, also with attribution.

Data Quality & Collection

ROVR Open Dataset is captured as time-linked video and laser measurements, so models learn depth from motion, not frozen frames. Each sequence bundles synchronized RGB images, LiDAR point clouds, and per-frame vehicle pose, making depth supervision consistent across time rather than inferred from single snapshots.

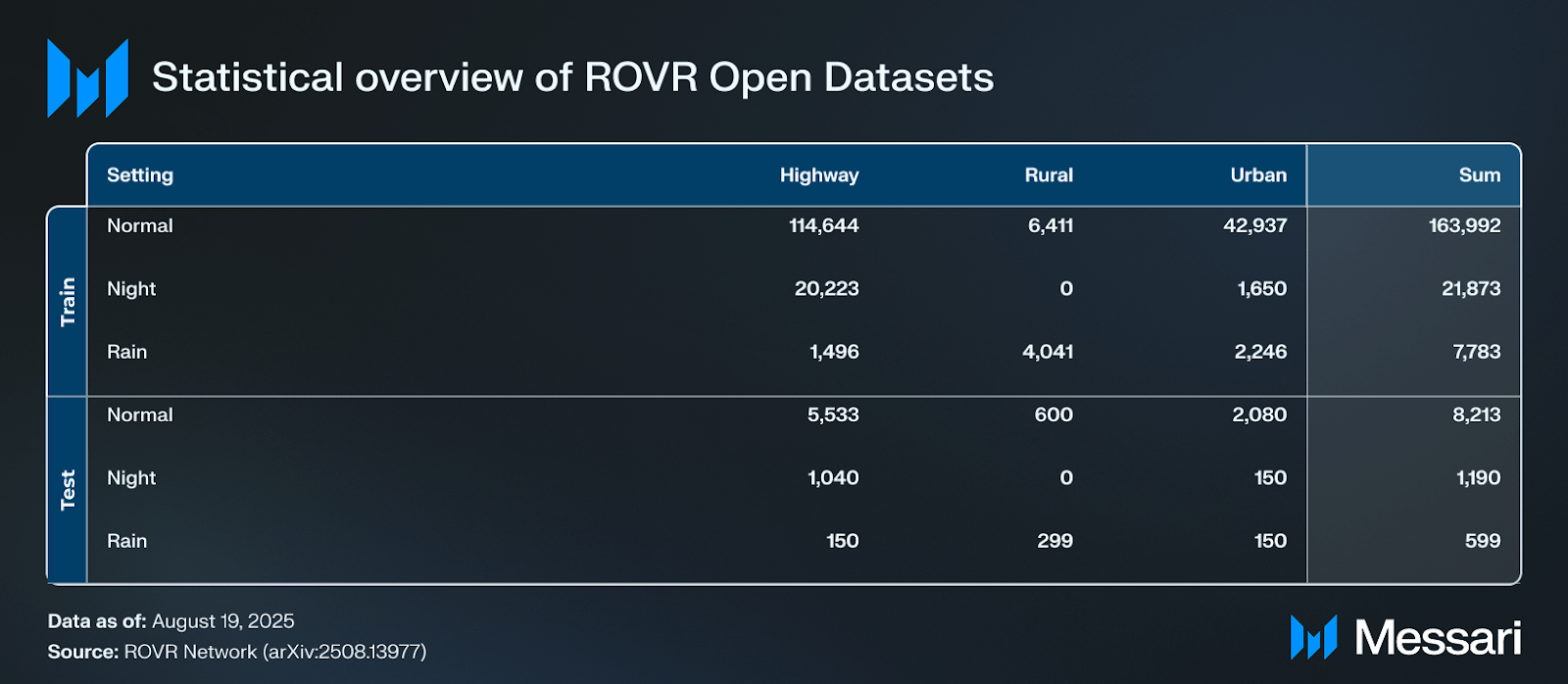

The first release consists of 1,363 clips of roughly thirty seconds each, split into 1,296 training clips and 67 test clips. At five frames per second for both camera and LiDAR, a thirty-second clip yields about 150 paired frames; the training and test splits include 193,648 and 10,002 paired frames, respectively. For comprehensive details on data acquisition, refer to Guo et al., ROVR-Open-Dataset: A Large-Scale Depth Dataset for Autonomous Driving.
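As a quick sanity check on the arithmetic above, the following sketch multiplies the nominal 150 frames per clip by the clip counts and compares the result with the reported split sizes. All figures come from the text; the variable names are illustrative only.

```python
# Frame-count arithmetic for the first release (all figures taken from the text).
FPS = 5                   # logging rate for both camera and LiDAR
CLIP_SECONDS = 30         # nominal clip length ("roughly thirty seconds")
TRAIN_CLIPS, TEST_CLIPS = 1_296, 67

frames_per_clip = FPS * CLIP_SECONDS             # nominal paired frames per clip
nominal_train = TRAIN_CLIPS * frames_per_clip    # upper estimate for the training split
nominal_test = TEST_CLIPS * frames_per_clip      # upper estimate for the test split

# Split sizes as reported in the text.
reported_train, reported_test = 193_648, 10_002
reported_total = reported_train + reported_test

print("frames per clip:", frames_per_clip)
print("nominal train/test:", nominal_train, nominal_test)
print("reported total:", reported_total)
print("avg frames per training clip:", round(reported_train / TRAIN_CLIPS, 1))
```

The reported totals sit slightly under the nominal estimate, which is what one would expect if clips are only roughly thirty seconds long.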

Files are packaged in a researcher-friendly layout with images at 1920×1080, point clouds in PCD, LiDAR-projected depth maps in single-channel PNG, and navigation tracks (GNSS/INS poses plus 100 Hz IMU logs). All files carry nanosecond timestamps to keep modalities strictly aligned.
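To make "LiDAR-projected depth map" concrete, here is a minimal sketch of a pinhole projection that turns 3D points into a sparse depth map. It is not ROVR's pipeline: the intrinsics (`fx`, `fy`, `cx`, `cy`) are made-up placeholder values, the points are assumed to be already expressed in the camera frame, and the dataset's actual calibration format and PNG depth encoding are not specified here.

```python
import math

def project_to_depth(points, fx, fy, cx, cy, width, height):
    """Project camera-frame points (x right, y down, z forward, in meters)
    onto the image plane, keeping the nearest depth per pixel.
    Returns a sparse map {(u, v): depth_in_meters}."""
    depth = {}
    for x, y, z in points:
        if z <= 0:                       # behind the camera: not visible
            continue
        u = math.floor(fx * x / z + cx)  # pixel column
        v = math.floor(fy * y / z + cy)  # pixel row
        if 0 <= u < width and 0 <= v < height:
            # If two points land on the same pixel, the closer one occludes the other.
            if (u, v) not in depth or z < depth[(u, v)]:
                depth[(u, v)] = z
    return depth

# Hypothetical intrinsics for a 1920x1080 image (assumed values, not from the dataset).
sparse = project_to_depth(
    [(0.0, 0.0, 10.0), (0.0, 0.0, 5.0), (1.0, 0.0, 10.0), (0.0, 0.0, -1.0)],
    fx=1000.0, fy=1000.0, cx=960.0, cy=540.0, width=1920, height=1080,
)
print(sparse)
```

Most pixels receive no LiDAR return, which is why depth supervision from a single sweep is sparse.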

To capture this geospatial data, vehicles use a 126-beam solid-state LiDAR with a 200-meter range and a vertical field of view of ±12.5 degrees, paired with a forward-facing HD RGB camera at 1920×1080. Although the camera can operate at 30 Hz, both the camera and LiDAR are logged at 5 Hz in the dataset to provide matched clips for depth learning. A triple-frequency RTK GNSS receiver, aided by GEODNET corrections, supplies centimeter-level positioning, and an automotive-grade IMU stabilizes orientation and motion estimates. Sensors are hardware-synchronized with GPS-disciplined clocks, so the time offset between modalities stays below 2 ms.
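One way a consumer of the data might use the timestamps is to pair each camera frame with its nearest LiDAR sweep and reject pairs whose offset exceeds a tolerance. The sketch below assumes sorted integer nanosecond timestamps and borrows the 2 ms figure from the text as the tolerance; the function name and sample values are hypothetical.

```python
from bisect import bisect_left

def pair_by_timestamp(cam_ts, lidar_ts, tol_ns=2_000_000):
    """Pair each camera timestamp with the nearest LiDAR timestamp.
    Both inputs are sorted lists of integer nanoseconds.
    Pairs whose offset exceeds tol_ns (default 2 ms) are dropped."""
    pairs = []
    for t in cam_ts:
        i = bisect_left(lidar_ts, t)
        # The nearest neighbor is either just before or just after the insertion point.
        candidates = lidar_ts[max(i - 1, 0): i + 1]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda s: abs(s - t))
        if abs(nearest - t) <= tol_ns:
            pairs.append((t, nearest))
    return pairs

# Toy timestamps at 5 Hz (200 ms apart); the third LiDAR sweep is 5 ms off.
cam = [0, 200_000_000, 400_000_000]
lidar = [500_000, 201_500_000, 405_000_000]
pairs = pair_by_timestamp(cam, lidar)
print(pairs)
```

With hardware-synchronized sensors, a check like this should rarely drop frames; it mainly guards against gaps in a log.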

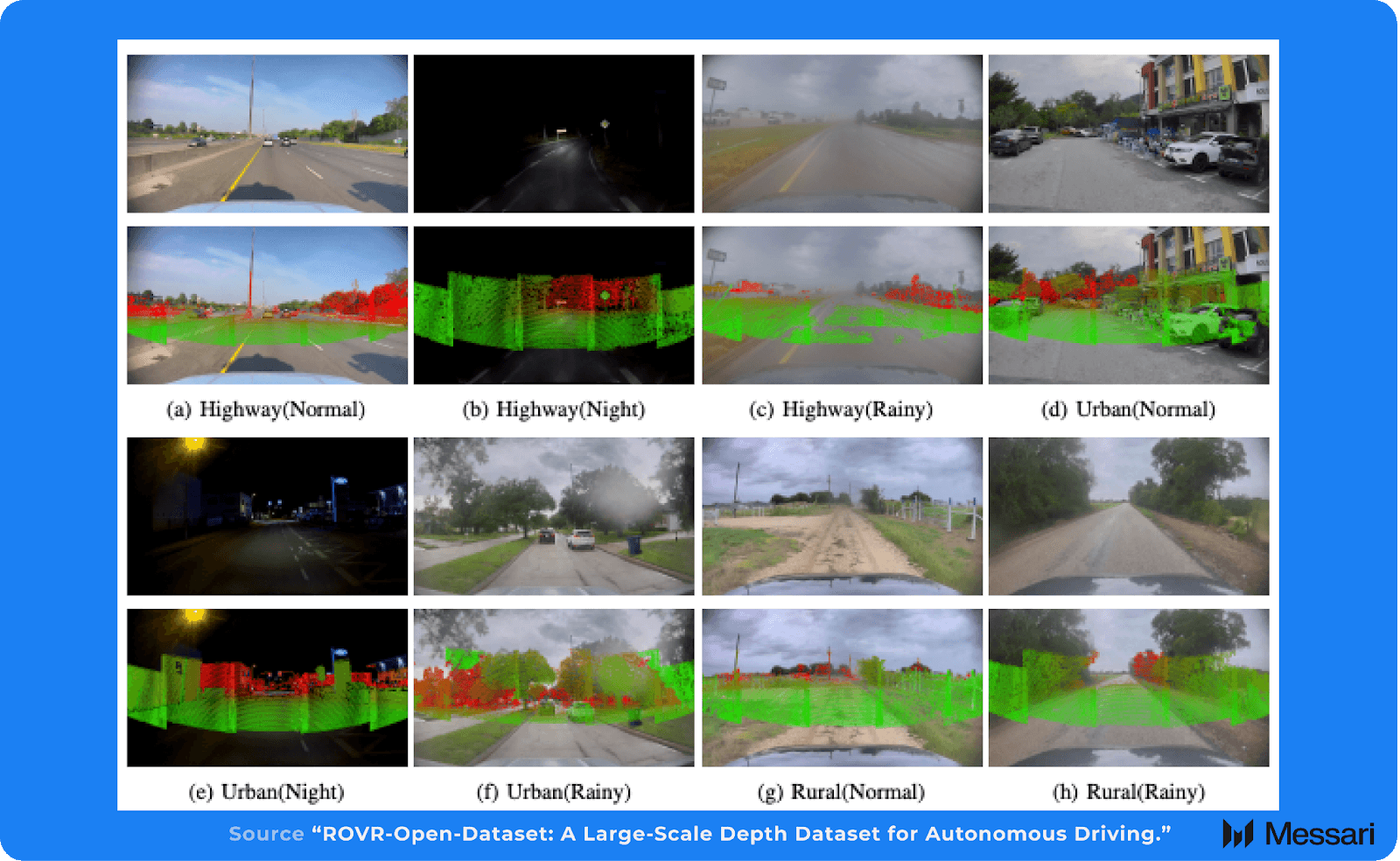

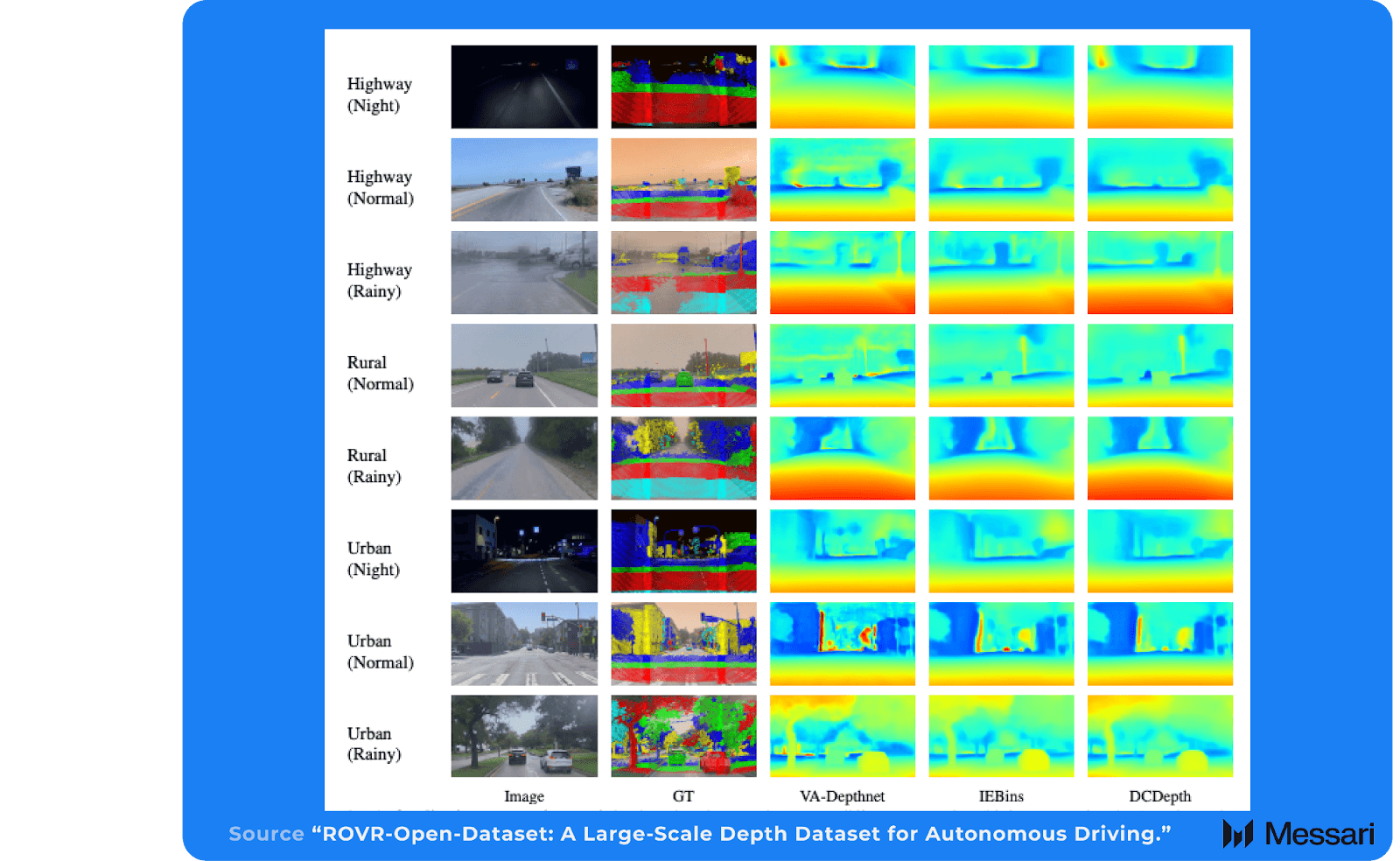

Collection spans North America, Europe, and Asia, with 10,000+ hours across urban, suburban, and highway routes in day, night, and rain. The released clips reflect that breadth: highway, urban, and rural scenes appear under normal illumination, low light, and precipitation.

Model Performance

By Dataset

To establish baselines, the ROVR team first trained VA-DepthNet separately on KITTI, DDAD, and nuScenes and evaluated it both in-domain and on ROVR. Next, ROVR conducted a cross-dataset comparison with VA-DepthNet, DCDepth, and IEBins, showing sharp degradations when trained on KITTI but clear gains when trained on ROVR (Table IV). All three models were trained on ROVR’s unified split and evaluated across illumination conditions and scene types.

ROVR uses two primary statistics to compare depth models:

- Absolute Relative Error (abs_rel) captures the average percentage error in predicted distance; lower values indicate better accuracy. An abs_rel of 0.20 means the model is off by about 20% on average.

- Threshold Accuracy (δ1) reports the share of pixels whose predicted distance is within 25% of the true distance.
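The two metrics can be sketched in a few lines. This version assumes the definitions commonly used in depth-estimation work: abs_rel as the mean of |pred − gt| / gt, and δ1 as the share of pixels where max(pred/gt, gt/pred) is below 1.25. Whether ROVR's evaluation code applies extra depth caps or masking is not stated here, so treat this as an illustration only.

```python
def valid_pairs(pred, gt):
    """Keep only pixels that have a ground-truth depth (gt > 0);
    LiDAR supervision is sparse, so most pixels carry no label."""
    return [(p, g) for p, g in zip(pred, gt) if g > 0]

def abs_rel(pred, gt):
    """Mean absolute relative error over labeled pixels (lower is better)."""
    pairs = valid_pairs(pred, gt)
    return sum(abs(p - g) / g for p, g in pairs) / len(pairs)

def delta1(pred, gt, thresh=1.25):
    """Share of labeled pixels whose ratio max(pred/gt, gt/pred) is below thresh
    (higher is better). Non-positive predictions count as misses."""
    pairs = valid_pairs(pred, gt)
    hits = sum(1 for p, g in pairs if p > 0 and max(p / g, g / p) < thresh)
    return hits / len(pairs)

# Toy example in meters; the last pixel has no LiDAR return (gt == 0) and is ignored.
gt = [10.0, 20.0, 40.0, 0.0]
pred = [12.0, 20.0, 60.0, 5.0]
print(round(abs_rel(pred, gt), 3), round(delta1(pred, gt), 3))
```

In the toy example, the 60 m prediction for a 40 m target is 50% off, so it both raises abs_rel and counts as a δ1 miss.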

A training set teaches the model, and a test set determines whether it learned general rules or memorized the dataset. When both come from the same dataset, the test often shares the same camera rigs, scenes, and labeling artifacts. Models exploit those patterns and look great in-sample while failing to generalize across other datasets.

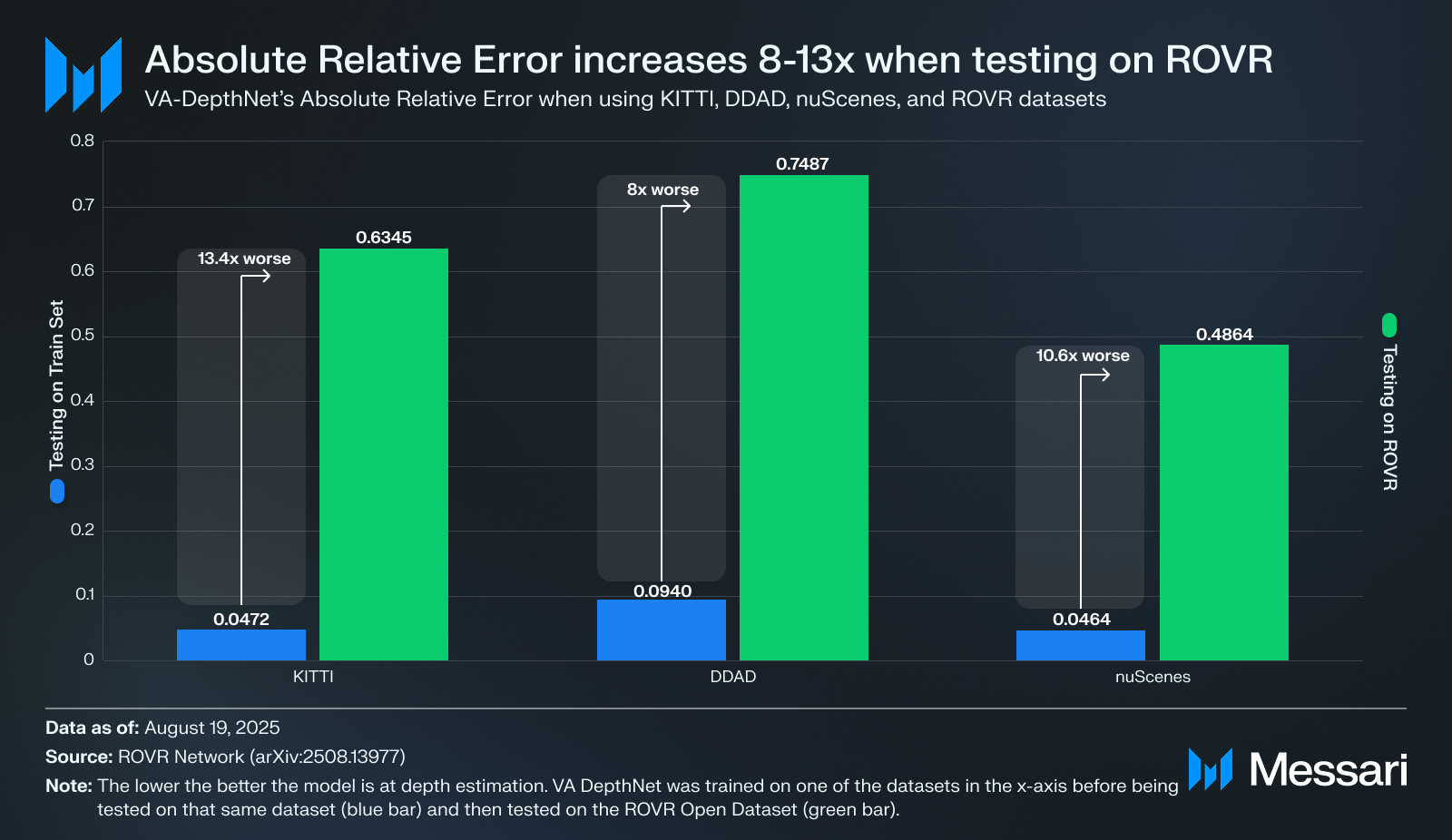

On KITTI, VA-DepthNet had an Absolute Relative Error of 0.047. Test that same model on ROVR’s Open Dataset, and the Absolute Relative Error rises to 0.635 (about 13.5x worse). DDAD shows the same pattern, rising from 0.094 to 0.749 (8x worse). nuScenes moves from 0.046 to 0.486 (10.6x worse). The gap points to ROVR’s Open Dataset being a more challenging benchmark relative to its peers.
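The "x worse" multiples are simply the ROVR error divided by the in-domain error. The sketch below recomputes them from the rounded figures quoted above; because the inputs are rounded to three decimals, the last digit of a ratio may differ slightly from one computed on unrounded scores.

```python
# (in-domain abs_rel, abs_rel on ROVR) for VA-DepthNet, as quoted in the text.
abs_rel_by_training_set = {
    "KITTI": (0.047, 0.635),
    "DDAD": (0.094, 0.749),
    "nuScenes": (0.046, 0.486),
}

# Fold increase = error on ROVR divided by in-domain error.
folds = {
    name: on_rovr / in_domain
    for name, (in_domain, on_rovr) in abs_rel_by_training_set.items()
}

for name, fold in folds.items():
    print(f"{name}: {fold:.1f}x")
```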

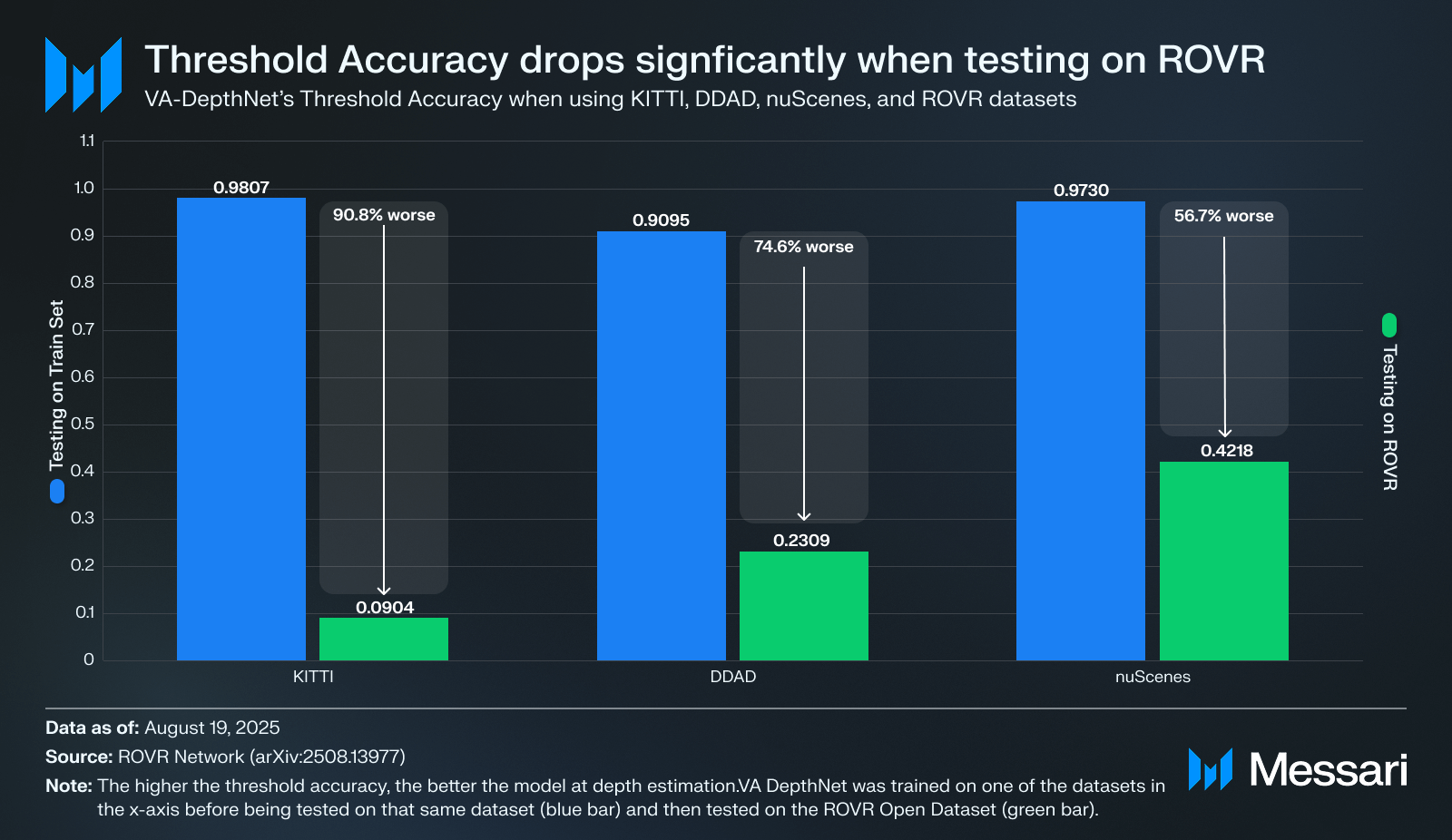

As with absolute relative error, the same phenomenon applies to threshold accuracy: models appear stronger when testing on the training set. When evaluated on their own train sets, VA-DepthNet trained on KITTI reports 0.981, on DDAD 0.909, and on nuScenes 0.973. However, when the exact same models are evaluated on ROVR instead, the KITTI-trained model falls to 0.090 (−89.1 points), the DDAD-trained model drops to 0.231 (−67.8 points), and the nuScenes-trained model slides to 0.422 (−55.1 points).

These headline gaps are less meaningful than they might seem at first glance because the baselines are inflated by testing on a train set and because the evaluation scopes differ. Competitors’ high scores when testing on their underlying train set reflect exposure to the same scenes, routes, camera intrinsics, and sensor rigs, artificially boosting threshold accuracy. By contrast, ROVR covers much broader geography and conditions; it mixes highways, dense cities, and rural roads; it spans day, night, and rain; and it uses long-range LiDAR supervision that surfaces errors at distances many models rarely see during training. The sharp drop on ROVR indicates that these models struggle to generalize once the scene distribution shifts beyond the confines of testing on the training set, which is the practical challenge real systems face outside curated environments.

Training on ROVR improves the picture but still leaves headroom. With the same setup, VA-DepthNet’s Absolute Relative Error decreases by 69.3% when trained on ROVR instead of KITTI (from 0.5896 to 0.1812). DCDepth shows a 69.6% reduction (from 0.6396 to 0.1943), and IEBins records a 64.7% reduction (from 0.6078 to 0.2146). After training on ROVR, Absolute Relative Error is still between 0.18 and 0.21. Paired with ROVR’s broader coverage, this shows that these models do not generalize as cleanly as prior, limited datasets suggested. The ROVR dataset now serves as a strong generalization benchmark to beat. Although there is no industry standard, many teams aim for lower Absolute Relative Error thresholds by distance and weather.
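The percentage reductions follow from (before − after) / before. A short sketch using the scores quoted above reproduces them; the helper name is illustrative.

```python
def relative_reduction(before, after):
    """Percentage drop from `before` to `after`."""
    return (before - after) / before * 100.0

# abs_rel on ROVR's test set: (trained on KITTI, trained on ROVR), as quoted in the text.
abs_rel_scores = {
    "VA-DepthNet": (0.5896, 0.1812),
    "DCDepth": (0.6396, 0.1943),
    "IEBins": (0.6078, 0.2146),
}

reductions = {
    model: round(relative_reduction(kitti, rovr), 1)
    for model, (kitti, rovr) in abs_rel_scores.items()
}
print(reductions)
```

Note that this is a relative change in an error metric, whereas changes in δ1 are typically quoted in percentage points (a simple difference).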

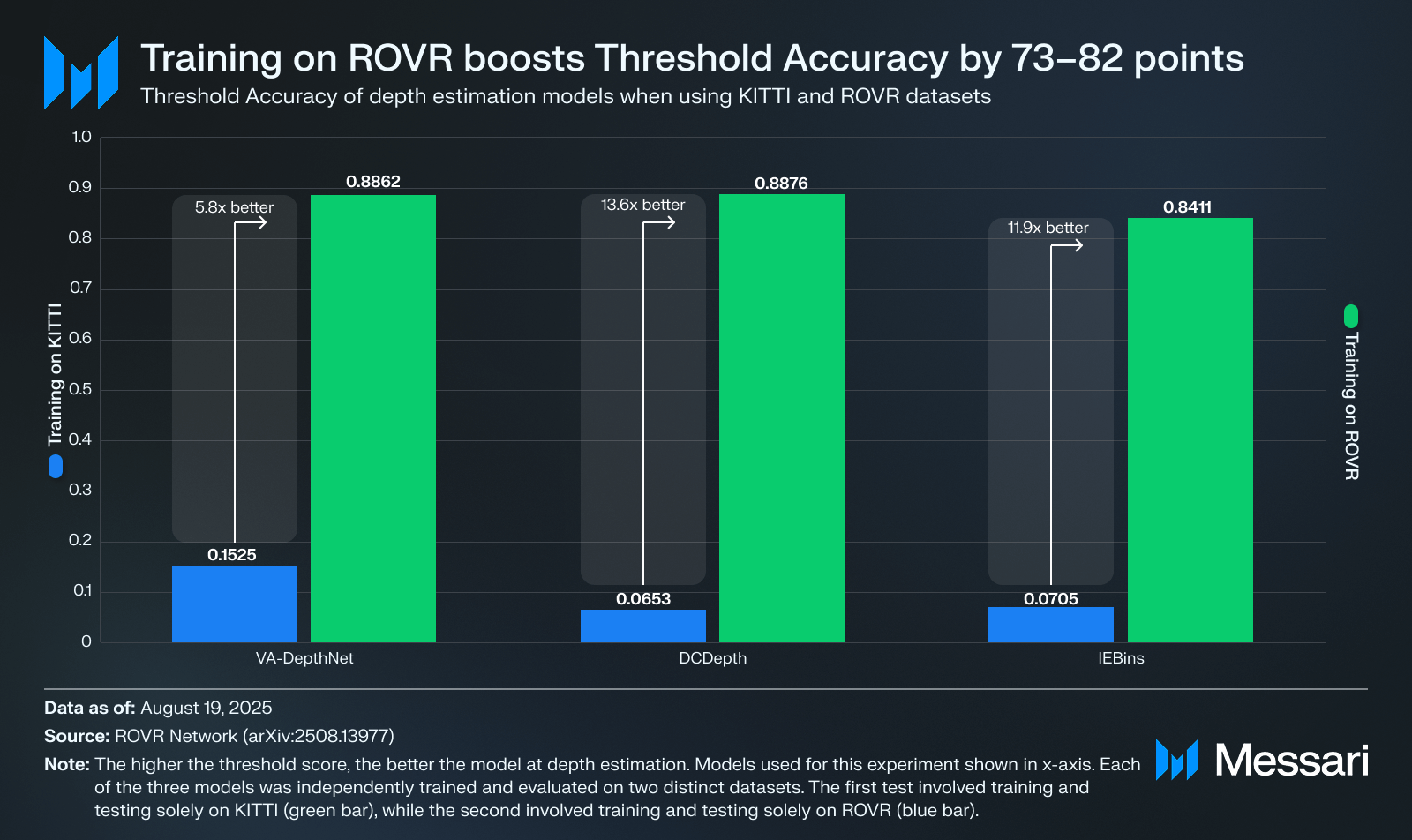

For Threshold Accuracy (δ1), VA-DepthNet improves by 73.4 points when trained on ROVR rather than KITTI (from 0.1525 to 0.8862). DCDepth increases by 82.2 points (from 0.0653 to 0.8876), and IEBins increases by 77.1 points (from 0.0705 to 0.8411). Despite these improvements, δ1 remains in the 0.84 to 0.89 range on ROVR, leaving room for improvement. Since δ1 measures the share of pixels within 25% of depth, 0.84–0.89 means 11–16% of pixels still miss that tolerance on ROVR’s harder scenes. There is no industry standard, but many teams target δ1 ≥ 0.95 and expect stable performance at night and in rain; 1.0 is a theoretical ceiling and not attainable in practice given sensor and label noise.

By Conditions

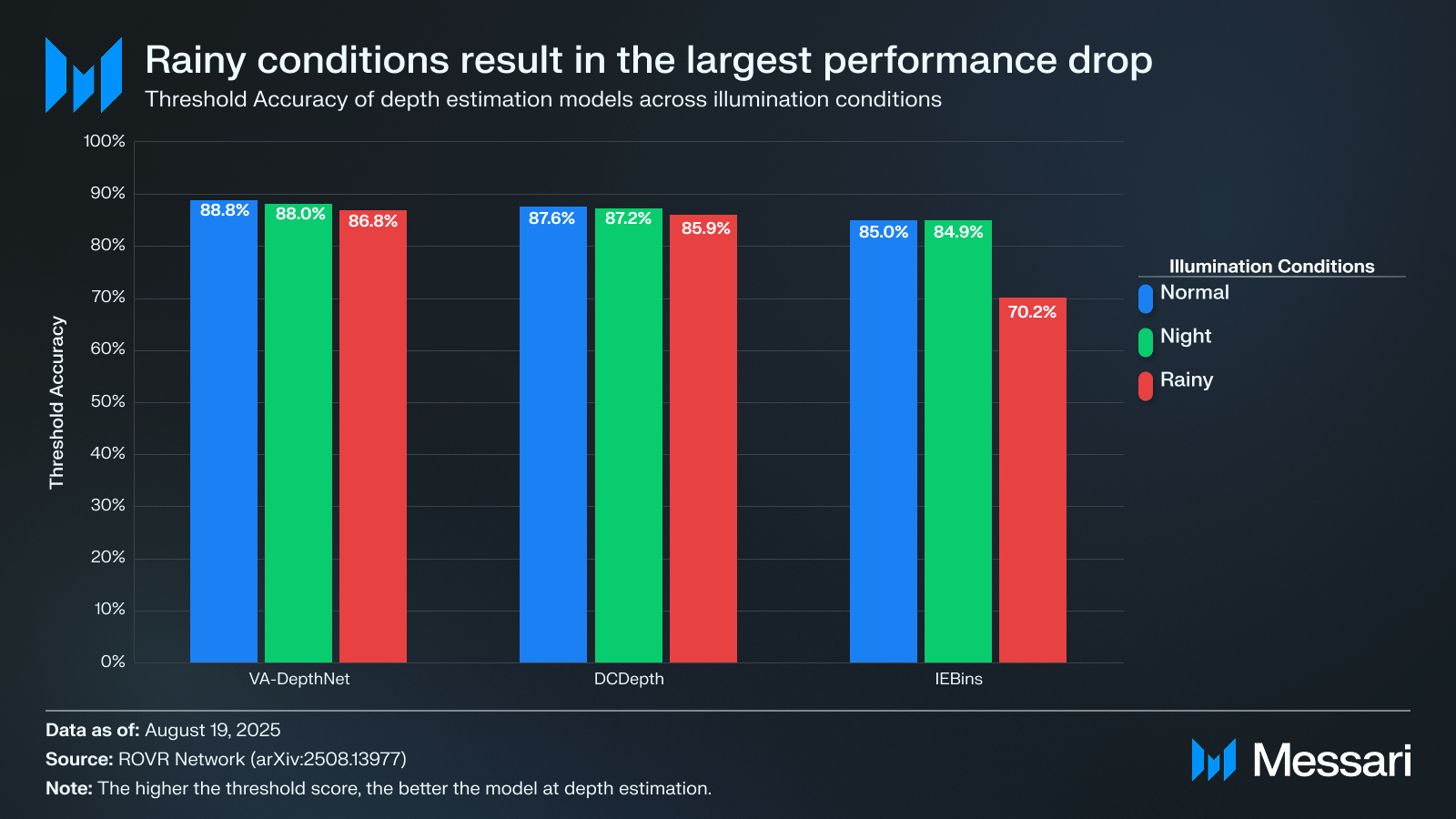

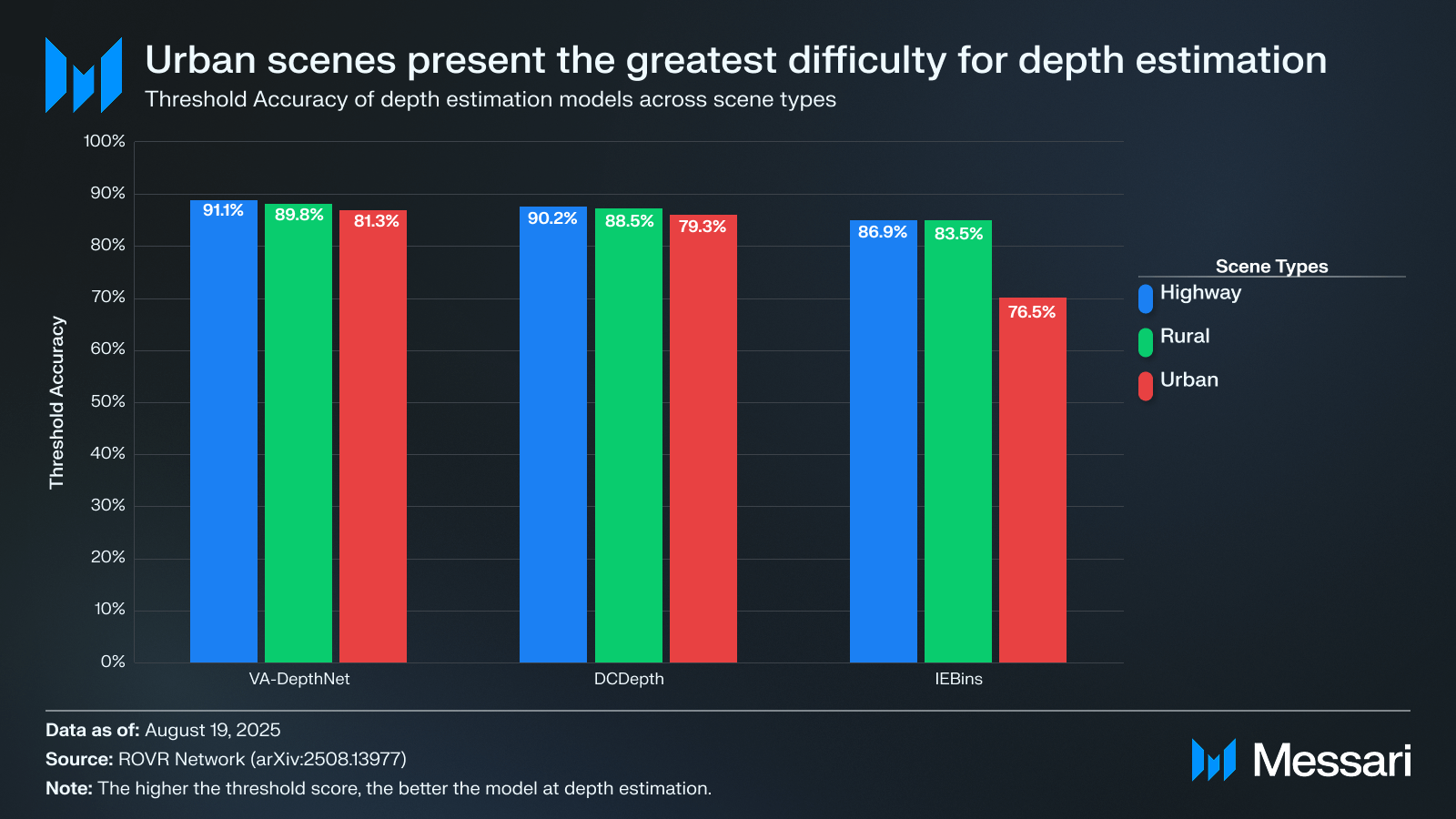

The ROVR team trains each baseline on the same ROVR split and then slices the test set by illumination (Normal, Night, Rainy) and scene type (Highway, Rural, Urban). The results use the same metrics defined earlier.
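Slicing a test set by condition amounts to a group-by over per-frame results. The sketch below assumes each test frame carries hypothetical `illumination` and `scene` tags and a per-frame abs_rel; the records are toy placeholders, not dataset results, and an actual benchmark may pool pixels across frames rather than average per-frame scores.

```python
from collections import defaultdict

def slice_metric(records, key, metric="abs_rel"):
    """Average a per-frame metric within each group named by records[i][key]."""
    sums, counts = defaultdict(float), defaultdict(int)
    for r in records:
        sums[r[key]] += r[metric]
        counts[r[key]] += 1
    return {group: sums[group] / counts[group] for group in sums}

# Toy per-frame results (placeholder values for illustration only).
records = [
    {"illumination": "Normal", "scene": "Highway", "abs_rel": 0.1},
    {"illumination": "Night", "scene": "Urban", "abs_rel": 0.3},
    {"illumination": "Normal", "scene": "Urban", "abs_rel": 0.2},
]

by_illumination = slice_metric(records, "illumination")
by_scene = slice_metric(records, "scene")
print(by_illumination)
print(by_scene)
```

Reporting both slices from the same trained model keeps the comparison about the data conditions rather than about training differences.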

Across illumination, accuracy falls as conditions get harsher. δ1 slips at night and falls further in rain, even when some error metrics move less or in mixed directions, because the depth range shifts with the scene. For VA-DepthNet, δ1 drops from 88.8% in normal light to 88.0% at night and 86.8% in rain. DCDepth and IEBins show the same pattern. VA-DepthNet is the most stable at night. Rain is the toughest regime for each model: RGB quality degrades and LiDAR returns become sparser, which reduces the quality of the training labels.

To raise δ1 from ~0.7 to 0.9 in rain, you need cleaner labels and weather-aware training. In practice, that means combining and cleaning multiple LiDAR passes to get sturdier depth, using rain-focused augmentation or de-weathering, adding time or radar to fill gaps, and training with objectives that emphasize reliable pixels and penalize big mistakes.

By scene type, highways are easiest, urban streets are hardest, and rural streets are in the middle. On highways, the geometry is simple and occlusions are rare, so δ1 lands around 91.1% for VA-DepthNet, 90.2% for DCDepth, and 86.9% for IEBins. Move to urban scenes and those scores fall to 81.3%, 79.3%, and 76.5%, a drop of roughly 10 to 11 points for each model. abs_rel tells the same story: for VA-DepthNet it rises from 0.1476 on highways to 0.2763 in urban, an 87.2% increase. DCDepth climbs 87.5% and IEBins 66.5% on the same comparison. Urban clips stack clutter, occlusions, and multi-scale objects. The models smooth over distant depth, miss thin structures, and lag on objects entering from the periphery.

To lift δ1 from ~0.8 to 0.9+ in urban scenes, you’ll need sharper labels in crowded areas, higher-resolution features for thin and small objects, explicit occlusion and motion handling, longer temporal context, and (where available) fusion with radar or extra cameras to cover the periphery, plus training objectives that curb depth drift at distance and around edges.

To make models perform as well in hard scenarios like rain and urban scenes as they do in easy ones, you need to equalize three things across conditions: signal, supervision, and structure. Raise the signal with HDR imaging, de-weathering, and sensor fusion (radar or multi-camera) so night and rain aren’t starved for information. Supervision can be made denser by accumulating multi-sweep LiDAR, building scene-level reconstructions for dense depth, and targeting data collection at known failure points. Structure can be improved by training on video with multi-view geometry, explicit occlusion and motion reasoning, and longer temporal context. By combining these approaches, threshold accuracy in rainy and urban environments can potentially achieve parity with highway scenes.

ROVR Challenges

The ROVR team will host several challenges, allowing researchers to develop and benchmark AI models on problems such as:

- Monocular depth estimation: Predict per-pixel distance from a single camera frame, evaluated against LiDAR-projected ground truth.

- Fusion detection and segmentation: Combine camera and LiDAR to detect vehicles and other actors and to assign a class label to each pixel.

These challenges should encourage methods that learn well from sparse LiDAR supervision, hold up in adverse weather conditions, and transfer across highways, cities, and rural roads. This aligns well with the dataset’s stated goal and gives researchers and builders a diverse sandbox to experiment with.

Closing Summary

ROVR turns scarce geospatial data into a shared resource. The dataset spans highways, cities, and rural roads across day, night, and rain, and is scaling toward ~1M frames. ROVR will host global challenges in monocular depth and fusion detection/segmentation, creating a single comparable leaderboard and a straightforward way to refresh results as coverage expands.

The benchmark evaluations support the use of more diverse data. Models that look great on KITTI, DDAD, or nuScenes underperform when tested on ROVR. Training on ROVR narrows the gap but still leaves room for improvement, with Absolute Relative Error around 0.18–0.21 and Threshold Accuracy in the 0.84–0.89 range.

ROVR’s edge lies in its accessible hardware and DePIN network. Synchronized camera and LiDAR, consistent capture, and a global contributor base already logging tens of millions of kilometers create a flywheel where broader coverage improves models, better models produce richer HD maps, and those maps, in turn, fuel demand for the network.


This report was commissioned by ROVR Network. All content was produced independently by the author(s) and does not necessarily reflect the opinions of Messari, Inc. or the organization that requested the report. The commissioning organization may have input on the content of the report, but Messari maintains editorial control over the final report to retain data accuracy and objectivity. Author(s) may hold cryptocurrencies named in this report. This report is meant for informational purposes only. It is not meant to serve as investment advice. You should conduct your own research and consult an independent financial, tax, or legal advisor before making any investment decisions. Past performance of any asset is not indicative of future results. Please see our Terms of Service for more information.

No part of this report may be (a) copied, photocopied, duplicated in any form by any means or (b) redistributed without the prior written consent of Messari®.