Key Insights

- io.net is a decentralized computing network aggregating underutilized enterprise-grade GPUs to provide scalable, cost-efficient AI and machine learning compute power.

- Core technology integrates Ray-based distributed computing for task orchestration, reverse tunnels for secure remote access, and mesh VPNs for decentralized connectivity, ensuring scalability, fault tolerance, and efficient AI/ML workload execution.

- The network is secured through Proof-of-Work (PoW) and Proof of Time-Lock (PoTL), along with a staking-based model that penalizes underperforming or malicious nodes.

- io.net has partnered with Allora Network, GAIB, Oortech, FrodoBots, ChainGPT, and Phala Network, among others.

Introduction

The artificial intelligence (AI) market, valued at ~$184 billion in 2024, is projected to reach ~$826 billion by 2030. However, it faces multiple bottlenecks, one of which is compute power. The rising demand for training large-scale AI models has driven graphics processing unit (GPU) shortages, causing prices for high-performance chips like NVIDIA’s H100 to reach $40,000 per unit, making it difficult for companies to access scalable and affordable computing resources.

io.net addresses these challenges through a decentralized computing network, aggregating underutilized enterprise-grade GPUs across six continents and 50+ countries to provide on-demand, scalable, and cost-efficient AI computing infrastructure. Specifically, its framework uses Ray-based distributed computing to optimize clustering, task orchestration, and parallelized workloads.

The io.net ecosystem includes (i) IO Cloud, a decentralized GPU marketplace that allows dynamic workload scaling for AI/ML applications; (ii) IO Intelligence, an AI infrastructure platform offering pre-trained models and AI agents; (iii) IO Worker, an interface for GPU providers to contribute resources and manage their earnings; (iv) IO Staking, a platform for tracking and managing co-staking allocations within the network; (v) IO Explorer, a monitoring tool that provides real-time insights into compute usage, performance metrics, and network activity for the entire Internet of GPUs (IOG) network; and (vi) IO ID, a platform for tracking earnings, monitoring balance updates, and withdrawing funds.


Background

io.net (IO), previously known as ANTBIT, was founded in 2022 and is a decentralized network of GPUs and central processing units (CPUs) designed to provide accessible, scalable, and efficient access to compute resources. The project’s decentralized physical infrastructure network (DePIN) offers suppliers and buyers increased flexibility and control of compute resources.

The project team includes Gaurav Sharma (Chief Executive Officer and Chief Technology Officer), previously at Binance; Basem Oubah (Co-Founder); Saad Alenezi (Co-Founder); Tory Green (Co-Founder), previously at Oaktree; and Maher Jilani (Chief Experience Officer), previously at Capital One. Since its inception, the team has raised ~$30 million from investors (e.g., Hack VC, Solana Labs, and OKX Ventures).

Technology

io.net is built on a decentralized computing infrastructure designed to efficiently aggregate and distribute GPU resources for high-performance computing tasks. Specifically, it uses Ray-based distributed computing to optimize clustering, task orchestration, and parallelized workloads. The network is structured around two core parts: (i) the GPU and CPU suppliers and (ii) the orchestration and scheduling of those suppliers.

GPU and CPU Suppliers

Compute providers supply GPU and CPU resources to the IOG Network, forming the backbone of the network’s distributed computing infrastructure. These resources come from independent data centers, crypto miners, and underutilized cloud capacity (e.g., Render and Filecoin).

Before any workloads and IO rewards are distributed, devices must (i) meet the minimum system requirements, (ii) pass the Proof-of-Work and Proof of Time-Lock verifications, (iii) stake a formulated amount of IO tokens, and (iv) if it’s the user’s first time onboarding, wait 12 hours – during which the GPUs, network, and system configuration are stress-tested to ensure they can perform optimally under production workloads.

The most common workloads for suppliers are (i) batch inference and model serving, (ii) parallel training, (iii) parallel hyperparameter tuning, and (iv) reinforcement learning.

Verification & Proof Mechanisms

To maintain security, reliability, and fair resource allocation, io.net implements two proofs (i.e., Proof-of-Work and Proof of Time-Lock) that confirm the GPU and CPU resources are legitimate and function as advertised by the supplier.

Proof-of-Work (PoW)

The first proof, Proof-of-Work (PoW), runs hourly and verifies compute legitimacy and performance. This system consists of three components working together: (i) the Binary Checker API, which verifies whether a solution meets the puzzle’s requirements; (ii) the Challenges API, which generates puzzles that require finding a number matching a specific pattern; and (iii) the Results Submission API, which submits the solution for validation to confirm its correctness.
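The interplay of the three components can be illustrated with a minimal sketch. It assumes the "specific pattern" is a run of leading zeros in a SHA-256 digest, which is a common hash-puzzle design and not something the io.net documentation cited here confirms. All function names are hypothetical stand-ins for the three APIs, not io.net's actual interfaces.

```python
import hashlib
import secrets


def generate_challenge(difficulty: int = 4) -> dict:
    """Hypothetical stand-in for the Challenges API: a random seed plus a target pattern."""
    return {"seed": secrets.token_hex(16), "prefix": "0" * difficulty}


def check_solution(challenge: dict, nonce: int) -> bool:
    """Hypothetical stand-in for the Binary Checker API: does the nonce match the pattern?"""
    digest = hashlib.sha256(f"{challenge['seed']}:{nonce}".encode()).hexdigest()
    return digest.startswith(challenge["prefix"])


def solve(challenge: dict) -> int:
    """Worker side: brute-force search for a number whose digest matches the pattern."""
    nonce = 0
    while not check_solution(challenge, nonce):
        nonce += 1
    return nonce


def submit_result(challenge: dict, nonce: int) -> str:
    """Hypothetical stand-in for the Results Submission API: re-validate, then report."""
    return "accepted" if check_solution(challenge, nonce) else "rejected"
```

Finding a valid number takes many hash evaluations, while checking one takes a single evaluation, which is what makes this kind of puzzle usable as a periodic performance check.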

Notably, while PoW is required for most suppliers to qualify for block rewards, there are two exceptions where PoW is not executed: (i) when a worker is nominated for a block reward, but is hired by a customer during the evaluation hour, exempting it from PoW verification to prevent job interruptions while still monitoring uptime to ensure eligibility for rewards, and (ii) head nodes that must always be available to manage jobs, exempting them from PoW while instead undergoing uptime verification via PoTL to qualify for rewards.

Proof of Time-Lock (PoTL)

The second proof is Proof of Time-Lock (PoTL), which verifies that compute resources remain fully dedicated to assigned workloads throughout their rental period. PoTL works by tracking resource usage from the start of the rental period to the end to confirm that the GPU has not been accessed by any other processes that could diminish compute performance. This proof consists of multiple verification steps, including (i) monitoring consumption metrics, (ii) tracking container/compute activity, (iii) eliminating unauthorized background processes, and (iv) enforcing a rewards and penalty system to maintain compliance.
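As a rough illustration of the monitoring step, the sketch below checks periodic samples of GPU activity against an allowlist. The `Sample` record, the sampling approach, and the process names are assumptions made for this example; the report does not describe how io.net collects or evaluates these metrics.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    minute: int               # minutes since the rental period started
    gpu_processes: frozenset  # names of processes observed on the GPU


def potl_violations(samples, allowed):
    """Return the samples in which a process outside the allowlist used the GPU."""
    return [s for s in samples if not s.gpu_processes <= allowed]


def passes_potl(samples, allowed) -> bool:
    """The rental passes only if no sample in the whole period shows a violation."""
    return not potl_violations(samples, allowed)
```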

Ultimately, compute providers that fail the PoW verification or have compromised computational capacity (i.e., fail PoTL) while connected to the platform may be tagged accordingly and excluded from block rewards and job assignments.

Notably, a minimum uptime requirement of five hours must also be met to maintain eligibility.

Staking Mechanisms & Incentives

As mentioned before, suppliers must stake IO tokens to qualify for block rewards and job assignments. The required stake is based on each supplier’s capacity and contribution, with a base stake of 200 IO per chip, adjusted by an earning multiplier predefined for each GPU/CPU model. The exact staking formula can be found here.
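A minimal sketch of the stake requirement described above, assuming the adjustment is a simple multiplication of the base stake by the chip count and the model's earning multiplier. The exact formula lives in the project documentation, and the multiplier used in the usage line is a made-up value.

```python
BASE_STAKE_PER_CHIP = 200  # IO per chip, as stated in the text


def required_stake(chips: int, earning_multiplier: float) -> float:
    """Assumed form: base stake x number of chips x per-model earning multiplier."""
    if chips < 0 or earning_multiplier < 0:
        raise ValueError("chips and earning_multiplier must be non-negative")
    return BASE_STAKE_PER_CHIP * chips * earning_multiplier


# Hypothetical supplier with 8 chips of a model whose multiplier is 1.5.
print(required_stake(8, 1.5))
```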

Furthermore, when unstaking, a 14-day cooldown period applies, during which tokens do not count toward block rewards. After this, they must be withdrawn before restaking.

Lastly, a slashing mechanism penalizes malicious or underperforming suppliers. Slashed tokens undergo a one-month appeal process before being burned if the appeal is unsuccessful.

Pricing Model and GPU Selection Criteria

Pricing on io.net is automatically determined based on supply and demand. Several factors influence the pricing, including (i) GPU specifications, (ii) connectivity speed, and (iii) security and compliance certifications. Users, on the other hand, can filter and select GPUs based on various parameters, including (i) supplier (e.g., io.net or Render), (ii) security compliance (e.g., SOC-2), (iii) location, (iv) connectivity tier/speed, (v) cluster processor, (vi) cluster base image, and (vii) master configuration (e.g., head node specification).
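The selection step can be pictured as a filter over available offers. The sketch below covers only a few of the parameters listed above. The `Offer` fields and the `filter_offers` function are hypothetical names for illustration and do not reflect io.net's actual API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    supplier: str           # e.g., "io.net" or "Render"
    gpu_model: str
    location: str
    soc2: bool              # security compliance flag
    connectivity_mbps: int  # connectivity speed


def filter_offers(offers, *, supplier=None, location=None,
                  require_soc2=False, min_mbps=0):
    """Keep offers matching every filter that was set; unset filters match anything."""
    return [
        o for o in offers
        if (supplier is None or o.supplier == supplier)
        and (location is None or o.location == location)
        and (o.soc2 or not require_soc2)
        and o.connectivity_mbps >= min_mbps
    ]
```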

Reward Calculation and Distribution

Block rewards are distributed in IO tokens hourly, following a predetermined emission schedule. The current allocation is 95% for GPUs and 5% for CPUs. Furthermore, supplier earnings are determined by a scoring system, which evaluates performance based on a device base score. This score incorporates various metrics (e.g., connectivity, hardware specifications, and reliability). The device normalized score represents a device’s relative earning potential within the network, calculated based on the total available emissions per block and the scores of all eligible devices. The IOG Foundation may modify the scoring formula and allocation ratio in the future.
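One way to read the normalized score is as a proportional share of each hourly pool. The sketch below assumes exactly that: the block emission is split 95/5 between GPUs and CPUs, and each device receives its pool multiplied by its score over the sum of eligible scores. The real scoring formula is defined by the project and may differ. The function and device names are hypothetical.

```python
GPU_SHARE = 0.95  # current allocation from the text
CPU_SHARE = 0.05


def distribute_block(emission: float, gpu_scores: dict, cpu_scores: dict) -> dict:
    """Split one block's emission across eligible devices in proportion to their scores."""
    rewards = {}
    for share, scores in ((GPU_SHARE, gpu_scores), (CPU_SHARE, cpu_scores)):
        total = sum(scores.values())
        if total == 0:
            continue  # no eligible devices of this type in this block
        pool = emission * share
        for device, score in scores.items():
            rewards[device] = pool * score / total
    return rewards
```

Under this assumption, a device's reward falls as more eligible devices join, even if its own score is unchanged, because the pool per block is fixed by the emission schedule.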

Other incentives have included the three seasons of Ignition Rewards Programs, which distributed rewards through (i) Worker Rewards, where suppliers earned points based on job hours completed, GPU model, bandwidth, and uptime; (ii) Community Questing Rewards, facilitated via GALXE, where users earned rewards by completing engagement tasks; and (iii) Discord Role Rewards, which awarded contributors based on participation in community bounties, content creation, and other activities. More details can be found here.

Task Orchestration & Scheduling

The diagram above illustrates io.net’s distributed computing architecture, built on a fork of Ray.io for parallel task execution. The system consists of a head node, which manages workload distribution and metadata, and multiple worker nodes that execute tasks. Key components of the head node include (i) a driver for task submission, (ii) a scheduler for workload assignment, (iii) an object store for data sharing, and (iv) a global control store (GCS) for managing metadata.

While the head node orchestrates workloads, it currently acts as a single point of failure—if it goes offline, the cluster stops functioning. However, it can detect worker node failures and reassign tasks to healthy nodes. To improve reliability, io.net plans to distribute head nodes across secure partner data centers.
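The reassignment behavior can be shown with a plain-Python simulation. This is not Ray's API or io.net's scheduler. It is a toy round-robin scheduler, with a hypothetical `is_alive` health check, that drops a worker once it is found to be down and retries the pending task on a healthy one.

```python
from collections import deque


def schedule(tasks, workers, is_alive):
    """Assign tasks round-robin; when a worker is down, drop it and retry the task elsewhere."""
    healthy = list(workers)
    pending = deque(tasks)
    assignment = {}
    i = 0
    while pending:
        if not healthy:
            raise RuntimeError("no healthy workers left")
        worker = healthy[i % len(healthy)]
        if not is_alive(worker):
            healthy.remove(worker)  # failure detected: stop using this worker
            continue                # the task stays pending and is tried on another worker
        assignment[pending.popleft()] = worker
        i += 1
    return assignment
```

Note that the scheduler itself remains the single point of failure in this toy model, mirroring the limitation described above.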

With this system and additional technical enhancements, io.net optimizes resource allocation by dynamically assigning GPU workloads based on availability and performance. Autoscaling adjusts usage as demand fluctuates, while fault tolerance and graph-based scheduling ensure efficient workload distribution, minimizing bottlenecks and improving performance.

IO Tunnels

The first of the above-mentioned technical enhancements is the use of reverse tunnels, a technique that bypasses firewall and NAT restrictions, improving network reliability and accessibility. Specifically, a reverse tunnel establishes a secure connection from a client to a remote server by opening an inbound connection on the server side. This is the opposite of a conventional forward tunnel, where a client opens a connection to the server.

Reverse tunnels simplify access to io.net miners and eliminate the need for complex network configurations. This allows the IOG Network to (i) enable engineers to access workers without concerns about network restrictions, port forwarding, or VPNs; (ii) establish secure communication channels between devices; (iii) manage multiple workers simultaneously; and (iv) ensure compatibility across different operating systems and environments.

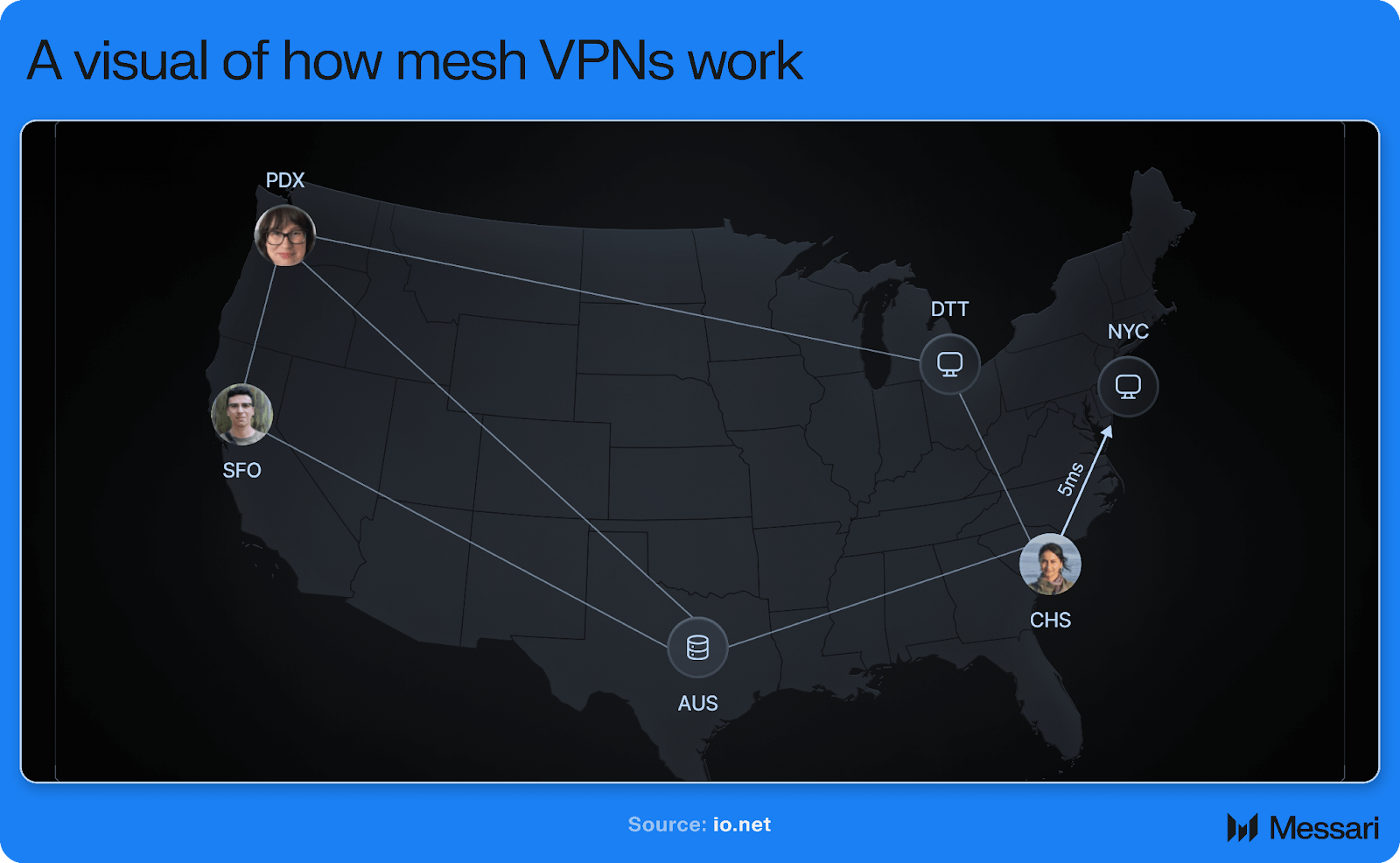

Mesh VPN

Another technical enhancement is the mesh VPN, a virtual private network (VPN) that connects nodes in a decentralized manner. Unlike traditional VPN architectures, which rely on a central concentrator or gateway, mesh VPNs allow each node to connect directly to every other node in the network. This ensures data packets travel along multiple paths, improving redundancy, fault tolerance, and load distribution while making it harder for attackers to trace origins or destinations. Additionally, packet padding and timing obfuscation protect against traffic analysis, making mesh VPNs a secure solution for decentralized networking.
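The topological difference can be made concrete by enumerating links. The sketch below compares a full mesh, where every pair of nodes has a direct link, with a hub-and-spoke layout built around a central gateway. Node names and function names are illustrative only.

```python
from itertools import combinations


def full_mesh_links(nodes):
    """Every pair of nodes gets a direct link: n * (n - 1) / 2 links in total."""
    return list(combinations(nodes, 2))


def hub_and_spoke_links(nodes, hub):
    """Every node connects only to the central gateway: n - 1 links in total."""
    return [(hub, n) for n in nodes if n != hub]


nodes = ["a", "b", "c", "d"]
print(len(full_mesh_links(nodes)), len(hub_and_spoke_links(nodes, "a")))
```

The mesh needs more links, but losing any single node still leaves the remaining nodes directly connected, whereas losing the hub disconnects every spoke.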

Putting It All Together

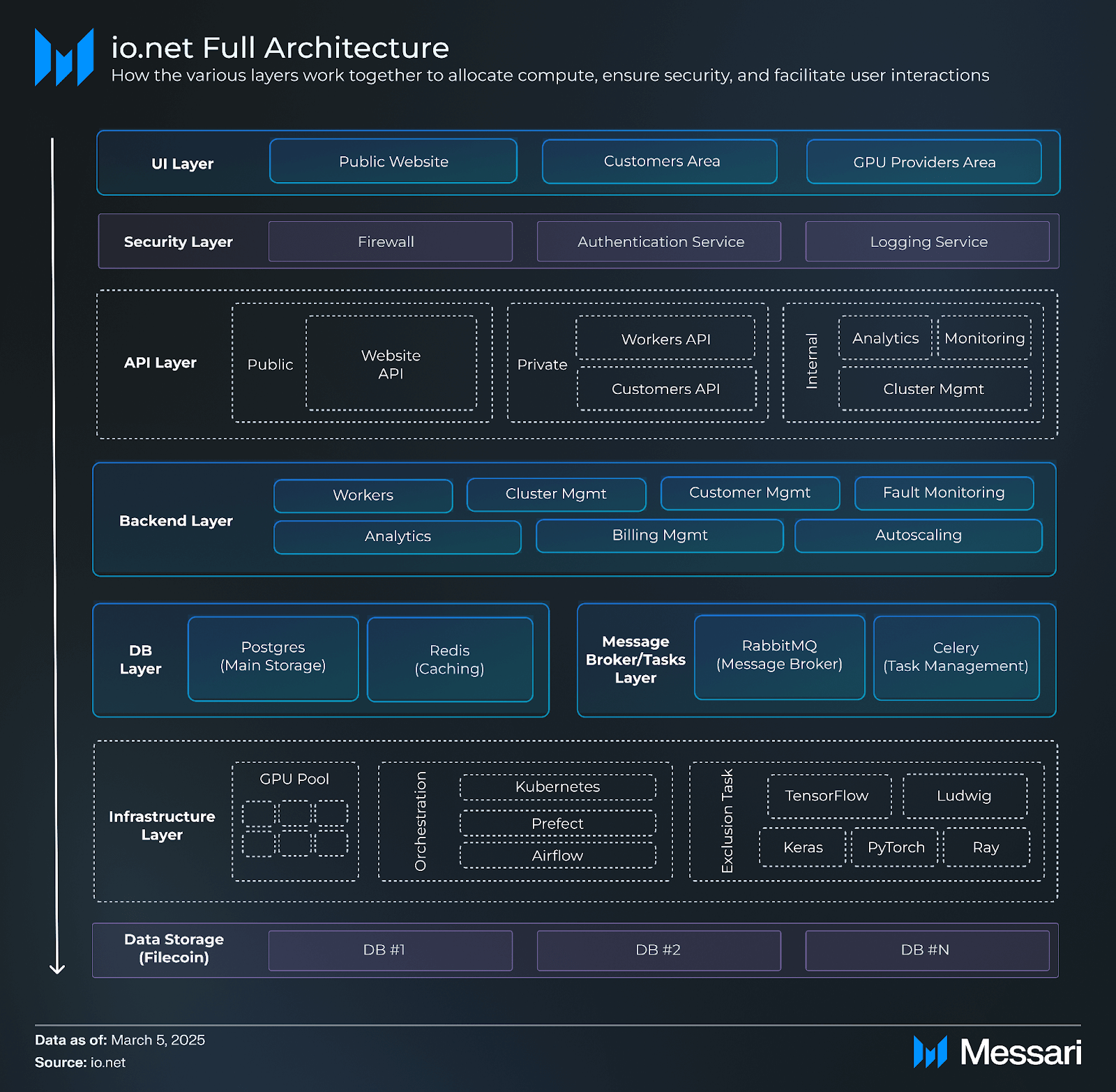

While the components above serve as the backbone of the infrastructure, they operate within a broader architecture comprising the (i) UI Layer, (ii) Security Layer, (iii) API Layer, (iv) Backend Layer, (v) Database Layer, (vi) Message Broker and Task Layer, (vii) Infrastructure Layer, and (viii) Data Storage Layer. Together, these layers facilitate seamless interaction between users, GPU providers, and system processes.

The following is an example of how each layer works together when a user wants to purchase compute power (a worker) on io.net Cloud.

- The UI Layer provides a seamless interface for selecting a GPU, setting parameters, and purchasing.

- The API Layer sends the request to the backend, which checks availability and assigns the job through the task orchestration system.

- The Security Layer verifies authentication and ensures a secure transaction.

- The Database Layer logs the transaction and maintains user and system records.

- Once assigned, the Infrastructure Layer provisions the selected worker from the GPU pool.

- The Message Broker and Task Layer coordinate job execution, while the Data Storage Layer ensures the job has the required datasets.

- The assigned worker node processes the AI/ML job; once completed, results are stored and made accessible to the user.

io.net also created a suite of products to enhance the ecosystem’s utility, as mentioned in this report’s “Introduction” section. Here is a list of the products and how consumers can use them:

- IO Cloud: A decentralized GPU marketplace that provides scalable, on-demand compute for AI and ML workloads. Customers can rent automatically priced GPUs, which can further be categorized into the following types of clusters:

  - Ray Cluster: A distributed computing environment powered by the Ray framework, enabling scalable and efficient task execution across multiple nodes.

  - Mega-Ray Cluster: An enhanced Ray-based cluster offering dynamic GPU/CPU resource allocation with greater flexibility and scalability.

  - To Be Released: Kubernetes, Bare Metal, Ludwig, Ray LLM, PyTorch FSDP, IO Native App, Unreal Engine 5, and Unity Streaming.

- IO Intelligence: An AI infrastructure platform offering pre-trained models, intelligent agents, and real-time analytics.

- IO Worker: An interface for GPU providers to contribute resources, manage earnings, and monitor device performance.

- IO Staking: A platform for tracking and managing co-staking allocations within the network.

- IO Explorer: A monitoring tool that provides real-time insights into compute usage, performance metrics, and network activity for the entire IOG Network.

- IO ID: A platform for tracking earnings, monitoring balance updates, and withdrawing funds.

IO Token

Token Functions

As the project’s documentation outlines, IO runs as an SPL token on Solana. It serves several key functions within the IOG Network, including:

- Paying for services (e.g., GPUs and CPUs).

  - Payments can be made in fiat or USDC but are converted to IO. A 2% facilitation fee is waived for direct IO payments.

  - GPU renters and suppliers pay a 0.25% reservation fee.

- Staking to support network operations and earn staking rewards.

  - GPU suppliers and node operators must stake a formulated amount of IO to qualify for Proof of Time-Lock (PoTL), earning hourly block rewards while facing penalties for uptime failures and fraudulent activity.

  - io.net allows multiple participants to co-stake IO, supporting GPU suppliers and earning shared rewards.

- Participating in the project’s governance process (yet to be launched).
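The fee figures listed under "Paying for services" can be combined into a small worked example. It assumes both fees are percentages of the job price, added on top for the renter and deducted for the supplier. The report states the rates but not how they are applied, so treat this only as a sketch.

```python
FACILITATION_FEE = 0.02   # 2%, waived for direct IO payments
RESERVATION_FEE = 0.0025  # 0.25%, paid by both renters and suppliers


def renter_total(price: float, pays_in_io: bool) -> float:
    """Assumed renter cost: price plus reservation fee, plus facilitation fee if not paying in IO."""
    fee_rate = RESERVATION_FEE + (0.0 if pays_in_io else FACILITATION_FEE)
    return price * (1 + fee_rate)


def supplier_net(price: float) -> float:
    """Assumed supplier proceeds: price minus the reservation fee."""
    return price * (1 - RESERVATION_FEE)


print(renter_total(100.0, pays_in_io=True), renter_total(100.0, pays_in_io=False))
```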

Tokenomics

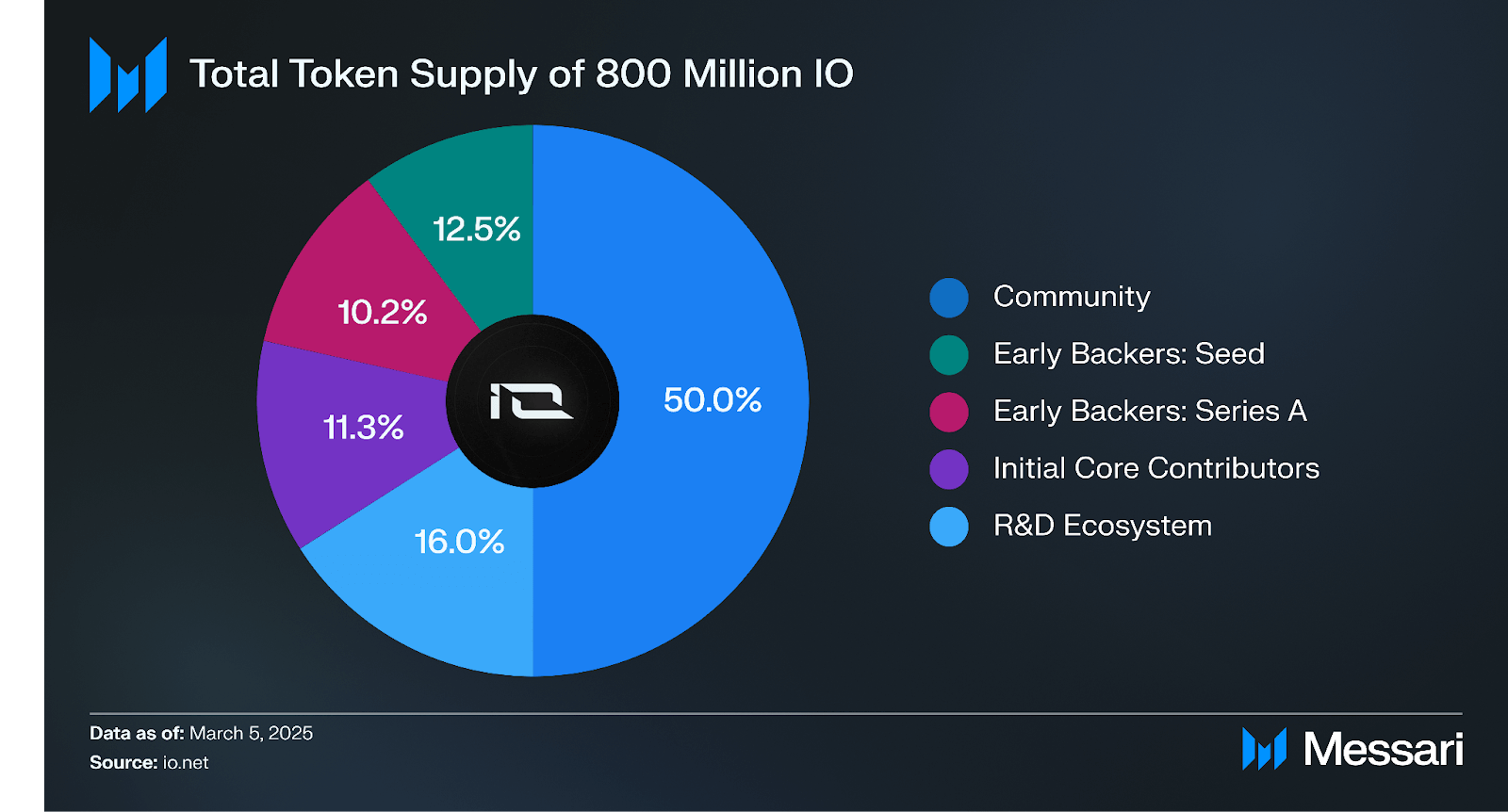

The pie chart above depicts the following IO token allocations (valued at ~$856 million as of March 5, 2025, with IO priced at ~$1.07):

- Community: 50.00% (400 million IO, ~$428 million)

- R&D Ecosystem: 16.00% (128 million IO, ~$137 million)

- Early Backers: Seed: 12.50% (100 million IO, ~$107 million)

- Initial Core Contributors: 11.30% (90.4 million IO, ~$96.7 million)

- Early Backers: Series A: 10.20% (81.6 million IO, ~$87.3 million)
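The token and dollar amounts in the list above follow directly from the percentages, the 800 million IO supply cap, and the ~$1.07 price. The short script below reproduces that arithmetic; the variable and function names are chosen for this example.

```python
TOTAL_SUPPLY_IO = 800_000_000
PRICE_USD = 1.07  # approximate IO price on March 5, 2025, from the text

ALLOCATION_PCT = {
    "Community": 50.00,
    "R&D Ecosystem": 16.00,
    "Early Backers: Seed": 12.50,
    "Initial Core Contributors": 11.30,
    "Early Backers: Series A": 10.20,
}


def allocation_table() -> dict:
    """Return {bucket: (tokens, usd_value)} derived from the percentages."""
    table = {}
    for bucket, pct in ALLOCATION_PCT.items():
        tokens = TOTAL_SUPPLY_IO * pct / 100
        table[bucket] = (tokens, tokens * PRICE_USD)
    return table


for bucket, (tokens, usd) in allocation_table().items():
    print(f"{bucket}: {tokens / 1e6:.1f}M IO, ~${usd / 1e6:.1f}M")
```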

Additionally, io.net’s compute reward emissions follow a structured disinflationary schedule. Specifically, the emission model transitions hourly to monthly, reducing inflation over 20 years while maintaining the total supply cap of 800 million IO. Alongside this, IO employs a programmatic burn mechanism using revenues from the IOG Network to purchase and burn IO.

Token Vesting

Vesting schedules for the parties mentioned above include:

- All Early Backers: Tokens unlock after a 12-month cliff, then vest linearly over 24 months (three-year total vesting period).

- Initial Core Contributors: Tokens unlock after a 12-month cliff, then vest linearly over 36 months (four-year total vesting period).
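The two schedules above share one shape: nothing unlocks during the cliff, then tokens vest linearly. The sketch below assumes vesting is continuous in months and starts from zero at the end of the cliff. The report does not say whether unlocks happen in discrete steps, so the granularity is an assumption.

```python
def vested_fraction(months_elapsed: float, cliff_months: int, linear_months: int) -> float:
    """Fraction of an allocation vested after a cliff followed by linear vesting."""
    if months_elapsed <= cliff_months:
        return 0.0
    return min(1.0, (months_elapsed - cliff_months) / linear_months)


# Early Backers: 12-month cliff, then 24 months linear.
print(vested_fraction(24, cliff_months=12, linear_months=24))
# Initial Core Contributors: 12-month cliff, then 36 months linear.
print(vested_fraction(24, cliff_months=12, linear_months=36))
```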

Governance

io.net’s governance framework will be centered on staking-based participation. A commercially reasonable search found no formal community governance system.

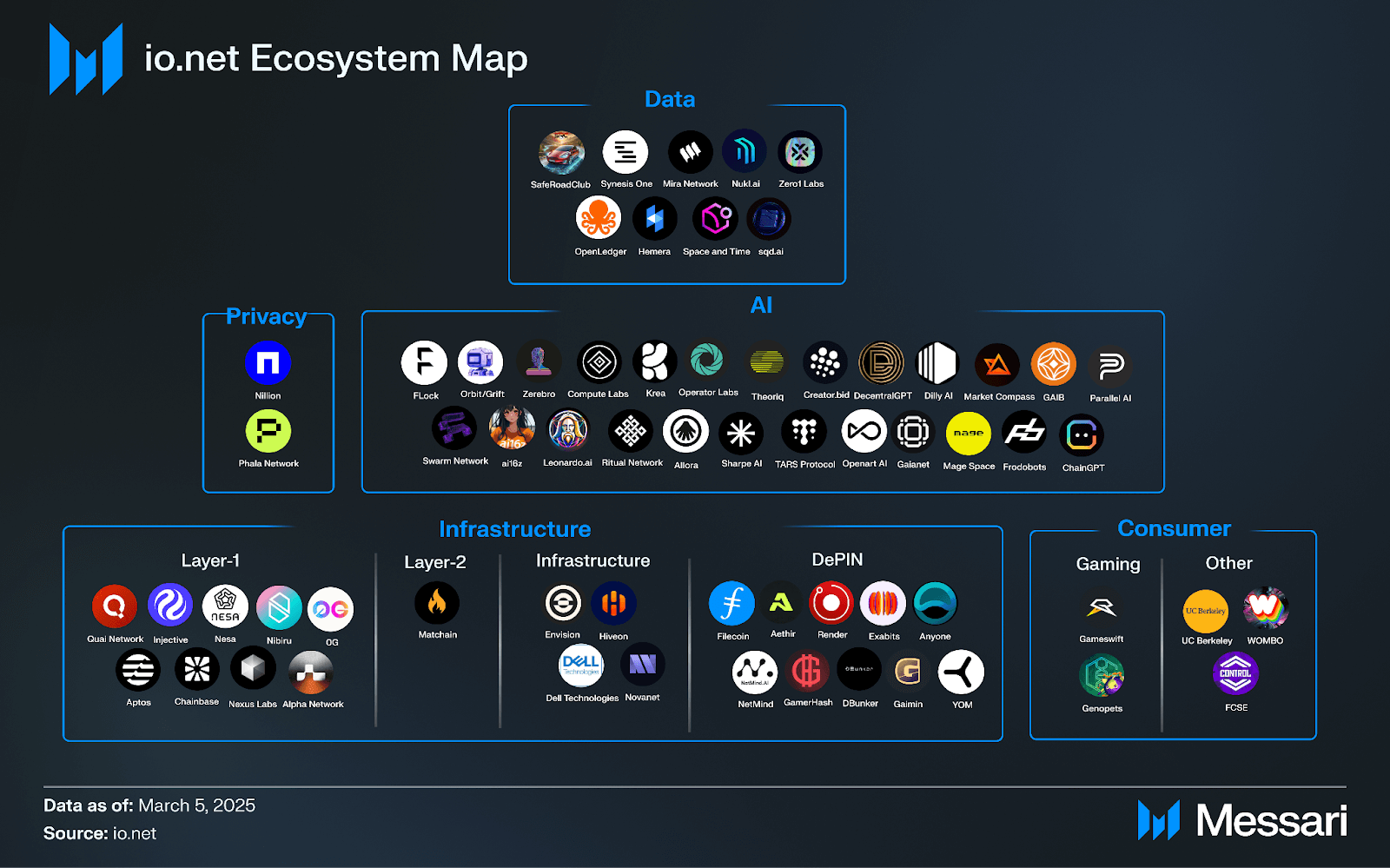

State of the io.net Ecosystem

Partners and Projects

Key projects that highlight the variety of benefits io.net provides:

- Nillion: A privacy-preserving computation network that integrated io.net’s GPU clusters to scale blind computation and decentralized AI inference.

- Dell Technologies: A global technology leader that welcomed io.net into its Partner Program, enabling decentralized GPU computing for enterprise AI, ML, and HPC workloads.

- Theoriq: An AI agent research platform leveraging io.net’s decentralized compute for large-scale machine learning and data-driven modeling.

- Phala Network: A blockchain-TEE hybrid platform enabling secure offchain AI computation controlled by onchain smart contracts, integrating io.net’s decentralized GPU infrastructure.

Recent partnerships and integrations include:

- March 7, 2025: Allora Network partnered with io.net to scale its Inference Synthesis mechanism using high-performance decentralized compute.

- March 6, 2025: GAIB integrated tokenized AI infrastructure with io.net’s decentralized GPU compute, granting io.net access to its first H200 GPUs.

- March 6, 2025: Oortech partnered with io.net to leverage decentralized GPU compute for its decentralized AI infrastructure.

- March 4, 2025: FrodoBots partnered with io.net to support AI research in urban navigation, using io.net’s decentralized GPU network to handle the computational demands of FrodoBots’ 2K dataset.

- Feb. 27, 2025: ChainGPT partnered with io.net to enhance smart contract analytics and AI-driven blockchain intelligence by leveraging decentralized GPU compute.

Roadmap

On Jan. 28, 2025, io.net released a Q1 2025 roadmap. Going forward, these quarterly roadmaps will be shared publicly via the project’s official website and X (Twitter) account. The contents of the latest roadmap, slightly modified by the team on May 1, 2025, include:

- IO Intelligence: An AI infrastructure and API platform that allows users to access and integrate pre-trained open-source models and custom AI agents into their applications via API calls.

- BC8 V2.0: Flagship consumer-facing image and video generation tool supporting up to 10 custom models. A Web3-native alternative showcasing in-house AI dev capability.

- Search & Deploy: A feature allowing users to efficiently find and deploy compute resources for AI and ML workloads.

- Super Aggregator: A tool that aggregates compute supply from various providers, optimizing cost, availability, and performance for users.

- Container As A Service: A service providing containerized compute environments, streamlining deployment and management for developers.

- Baremetal On Platform: Direct access to bare-metal GPU infrastructure for high-performance computing without virtualization overhead.

- Kubernetes Clusters: Native Kubernetes cluster support for improved orchestration and scaling of AI workloads.

- Worker UI Upgrade: Enhancements to the Worker interface.

- IO Ecosystem: Improvements to the rest of the ecosystem including IO Cloud.

- IO Agents: Personalized, task-performing agents (e.g., social media posting, summarization, and data pull) designed to support creators and automation use cases.

Additionally, the MeshVPN documentation outlines planned future improvements, including:

- MeshVPN Access Control Lists (ACLs): Nodes should define and enforce ACLs to restrict communication between specific nodes or groups of nodes.

- Regular MeshVPN Auditing and Logging: Configuration to allow regular audits and maintain logs of network activities.

Closing Summary

io.net is a decentralized computing network designed to address the growing demand for high-performance AI and machine learning workloads by aggregating underutilized GPUs from independent data centers, crypto miners, and cloud providers. By leveraging Ray-based distributed computing, io.net optimizes clustering, task orchestration, and parallelized workloads, enabling scalable and cost-efficient compute access. The project’s ecosystem comprises IO Cloud, a decentralized GPU marketplace; IO Intelligence, an AI infrastructure and API platform; IO Worker, an interface for GPU suppliers; IO Staking, a co-staking and reward tracking system; IO Explorer, a real-time network monitoring tool; and IO ID, a financial tracking solution for earnings and withdrawals.

Backed by strategic partners (e.g., Allora, GAIB, Oortech, FrodoBots, and ChainGPT), io.net’s network now spans 7,000+ GPUs across 50+ countries. io.net is positioning itself as a leading DePIN through its rapidly scaling network and strategic developments.


This report was commissioned by io.net. All content was produced independently by the author(s) and does not necessarily reflect the opinions of Messari, Inc. or the organization that requested the report. The commissioning organization may have input on the content of the report, but Messari maintains editorial control over the final report to retain data accuracy and objectivity. Author(s) may hold cryptocurrencies named in this report. This report is meant for informational purposes only. It is not meant to serve as investment advice. You should conduct your own research and consult an independent financial, tax, or legal advisor before making any investment decisions. Past performance of any asset is not indicative of future results. Please see our Terms of Service for more information.

No part of this report may be (a) copied, photocopied, duplicated in any form by any means or (b) redistributed without the prior written consent of Messari®.